2020 Mid-Year Core Deposit Intangibles Update

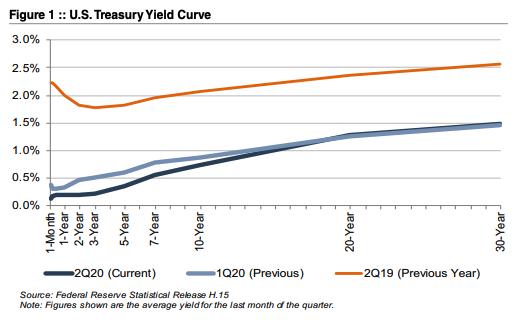

In our last update on core deposit trends, published in October 2019, we described a decreasing trend in core deposit intangible asset values in light of falling interest rates. In other words, even before COVID, the interest-rate environment was not favorable for core deposit valuations. Less than six months later, the World Health Organization declared the coronavirus outbreak a pandemic, and the world changed drastically. In response, the Fed cut rates to effectively zero, and the yield on the benchmark 10-year Treasury reached a record low. While many factors are pertinent to analyzing a deposit base, market interest rates are a significant driver of value. In a world of cut-rate alternative wholesale funding, core deposits have lost some of their luster.

M&A Activity

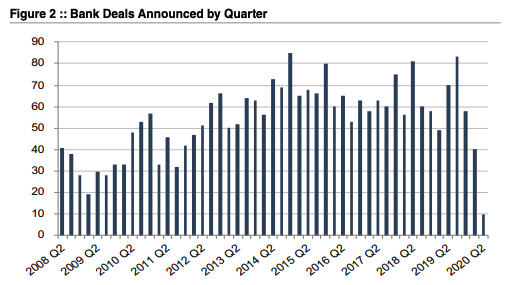

As shown in Figure 2, only ten whole-bank transactions were announced in the second quarter of 2020, the fewest announced in any quarter over the period analyzed. For comparison, the first quarter of 2009 posted the second-lowest figure, with 19 announced deals. It seems unlikely that deal activity will fully recover in the near future. Several issues are hindering deal activity:

- Credit quality. Until there is more certainty surrounding credit quality, it will be difficult to analyze a target’s loan portfolio. According to S&P Global Market Intelligence, deferrals peaked at an average of approximately 14% of community banks’ overall loan portfolios. On one hand, borrower payments have continued for most loans, even for some borrowers who were granted pandemic-related deferrals. On the other hand, the Paycheck Protection Program (“PPP”) has expired and supplemental unemployment benefits are ending while unemployment remains high.

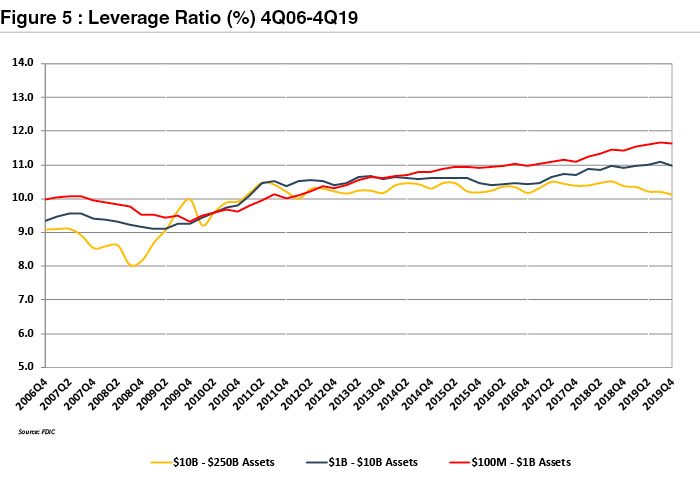

- Due diligence constraints. It remains functionally difficult to complete due diligence in an environment where travel may be unsafe, if not entirely prohibited.

- General uncertainty. It is unclear what the fall will bring, both from a pandemic perspective and from a policy perspective as it pertains to the November elections. It is also unclear what type of recovery to expect.

- Low demand for core deposits. The cost of alternative funding is low, and banks are generally flush with liquidity at the moment. According to S&P Global Market Intelligence, the average loan-to-deposit ratio has fallen to a 29-year low, and the Federal Reserve’s H.8 release indicates that deposit balances increased over 21% in the past four quarters. The bulk of the increase is attributable to transaction accounts rather than higher-cost time deposits. Consumer depositors are nervous about the next few months and hesitant to tie up funds, while commercial depositors are hesitant to invest in new projects given the economic uncertainty.

On the other hand, there are a couple of factors that might cause some banks to resume M&A discussions more quickly.

- Margin compression. Net interest margins continue to compress nationwide, a trend that is expected to persist for the foreseeable future. Loan rates are dropping faster than deposit rates, and pandemic-related charge-offs will begin to materialize soon. Deals can offer operational efficiencies that many banks will need. It could be difficult for some banks to produce acceptable shareholder returns if margins fall as much as many expect.

- Potential reversal of deposit influx. Many PPP recipients seem to be saving their funds in deposit accounts as a hedge against economic uncertainty. As these funds are deployed for payroll or other expenses, the savings trend could reverse. Banks may also be hesitant to reduce deposit rates further, whether for fear of deposit outflows or in anticipation of better loan demand as the economy recovers.

Trends in CDI Values

Using data compiled by S&P Global Market Intelligence, we analyzed trends in core deposit intangible (CDI) assets recorded in whole bank acquisitions completed from 2000 through June 30, 2020. CDI values represent the value of the depository customer relationships obtained in a bank acquisition. CDI values are driven by many factors, including the “stickiness” of a customer base, the types of deposit accounts assumed, and the cost of the acquired deposit base compared to alternative sources of funding. For our analysis of industry trends in CDI values, we relied on S&P Global Market Intelligence’s definition of core deposits.1 In analyzing core deposit intangible assets for individual acquisitions, however, a more detailed analysis of the deposit base would consider the relative stability of various account types. In general, CDI assets derive most of their value from lower-cost demand deposit accounts. Often significantly less value (if any) is ascribed to more rate-sensitive time deposits and public funds, or to non-retail funding sources such as listing service or brokered deposits, which are excluded from core deposits when determining the value of a CDI.
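The drivers listed above can be made concrete with a simplified version of the cost-savings approach, a common way of estimating a CDI. Under this approach, the intangible is measured as the present value of what the acquirer saves by funding with the acquired deposits rather than with an alternative source such as FHLB advances, while the balances run off over time. The sketch below is a minimal illustration under hypothetical assumptions: constant rates, a constant annual runoff rate standing in for "stickiness," end-of-year balances, and a ten-year horizon. The function name and every input are illustrative. They are not S&P Global Market Intelligence data or Mercer Capital's methodology.

```python
def cdi_as_pct_of_deposits(
    alt_funding_rate: float,  # cost of alternative funding, e.g. an FHLB advance rate (decimal)
    deposit_cost: float,      # all-in cost of the deposits: interest plus servicing (decimal)
    annual_runoff: float,     # fraction of the deposit base lost each year (decimal)
    discount_rate: float,     # rate used to discount the annual savings (decimal)
    years: int = 10,          # projection horizon in years (assumption)
) -> float:
    """Hypothetical cost-savings sketch: CDI as a fraction of the acquired deposit balance."""
    # A deposit base that costs more than alternative funding provides no savings,
    # so the spread is floored at zero in this simplified sketch.
    spread = max(alt_funding_rate - deposit_cost, 0.0)
    balance = 1.0  # normalize the acquired deposit base to 1.0
    value = 0.0
    for year in range(1, years + 1):
        balance *= 1.0 - annual_runoff  # balance retained at the end of the year
        value += balance * spread / (1.0 + discount_rate) ** year  # PV of that year's savings
    return value


# Hypothetical scenarios: alternative funding falls by 100 bp while deposit
# costs, which tend to lag market rates, fall by only 30 bp.
before = cdi_as_pct_of_deposits(0.025, 0.015, annual_runoff=0.15, discount_rate=0.10)
after = cdi_as_pct_of_deposits(0.015, 0.012, annual_runoff=0.15, discount_rate=0.10)
print(f"CDI before: {before:.2%} of deposits; after: {after:.2%}")
```

Because the spread narrows when alternative funding rates fall faster than deposit costs, the estimated CDI falls. A higher runoff rate, meaning a less sticky deposit base, lowers it as well.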

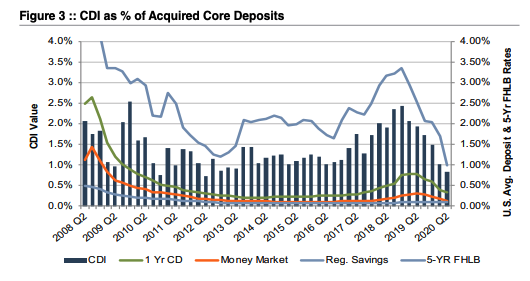

Figure 3 summarizes the trend in CDI values since the start of the 2008 recession, compared with rates on 5-year FHLB advances. Over the post-recession period, CDI values have largely followed the general trend in interest rates: as alternative funding became more costly in 2017 and 2018, CDI values generally ticked up as well, relative to post-recession average levels. Throughout 2019, CDI values trended downward amid the yield curve inversion and the Fed’s cuts to the target federal funds rate in the back half of the year. This trend was exacerbated in March 2020, when rates were effectively cut to zero.

The average CDI value declined 110 basis points from June 2019 to June 2020, while the rate on five-year FHLB advances declined 157 basis points over the same period. The decline in CDI values has been somewhat slower than the drop in benchmark interest rates, in part because deposit costs typically lag broader movements in market interest rates. For example, the average money market deposit rate and the average one-year CD rate declined 14 basis points and 43 basis points, respectively, over the same one-year period. In general, banks were slow to raise deposit rates during the period of contractionary monetary policy through 2018. As a result, deposit rates remain below benchmark levels, leaving banks less room to reduce them further. For CDs, the lag is even more pronounced: now that rates are declining, banks are stuck with CDs that cannot be repriced until they mature, even as benchmark rates fall. While time deposits typically are not considered “core deposits” in an acquisition and thus would not directly influence CDI values, they do significantly influence a bank’s overall cost of funds.

Average CDI values have fallen for the past six consecutive quarters. Since early 2008, CDI values have averaged 1.45% of deposits, meaningfully lower than long-term historical levels, which averaged closer to 2.5%-3.0% in the early 2000s.

Trends in Deposit Premiums Relative to CDI Asset Values

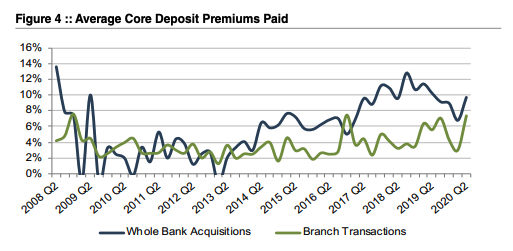

Core deposit intangible assets are related to, but not identical to, deposit premiums paid in acquisitions. CDI assets are intangible assets recorded in acquisitions to capture the value of the customer relationships the deposits represent. Deposit premiums, by contrast, are a function of the purchase price of an acquisition. Deposit premiums in whole bank acquisitions are computed as the excess of the purchase price over the target’s tangible book value, expressed as a percentage of the core deposit base. Deposit premiums often capture the value to the acquirer of assuming the established funding source of the core deposit base (that is, the value of the deposit franchise). However, the purchase price also reflects factors unrelated to the deposit base, such as the quality of the acquired loan portfolio and unique synergy opportunities anticipated by the acquirer. These additional factors may influence the purchase price to such an extent that the calculated deposit premium does not necessarily bear a strong relationship to the value of the core deposit base to the acquirer. This issue is often less relevant in branch transactions, where the deposit base is the primary driver of the transaction and the relationship between the purchase price and the deposit base is more direct. Figure 4 presents deposit premiums paid in whole bank acquisitions as compared to premiums paid in branch transactions.
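The whole bank deposit premium calculation described above reduces to a single ratio. A minimal sketch follows. The function name and the deal figures in the example are hypothetical.

```python
def deposit_premium(purchase_price: float, tangible_book_value: float, core_deposits: float) -> float:
    """Excess of purchase price over tangible book value, as a fraction of core deposits."""
    if core_deposits <= 0:
        raise ValueError("core_deposits must be positive")
    return (purchase_price - tangible_book_value) / core_deposits


# Hypothetical whole bank deal ($ millions): price 120, tangible book 100, core deposits 250.
premium = deposit_premium(120.0, 100.0, 250.0)
print(f"Deposit premium: {premium:.1%}")  # prints "Deposit premium: 8.0%"
```

Everything in the price beyond tangible book value flows into this ratio, including loan quality and expected synergies. That is why the premium can diverge from the CDI. A negative result simply means the deal was priced below tangible book value.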

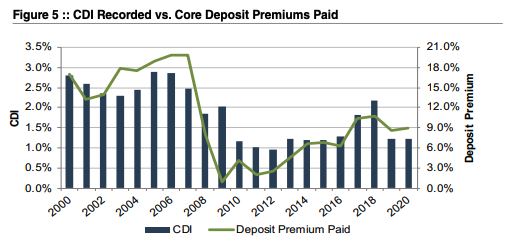

As shown in Figure 5, deposit premiums paid in whole bank acquisitions have been more volatile than CDI values. Despite improved deal values prior to the second half of 2019, deposit premiums in the range of 7% to 10% remain well below pre-Great Recession levels, when premiums for whole bank acquisitions averaged closer to 20%.

Deposit premiums paid in branch transactions have generally been less volatile than the tangible book value premiums paid in whole bank acquisitions. Branch transaction deposit premiums averaged in the 4.5%-7.5% range during 2019, up from the 2.0%-4.0% range observed during the financial crisis. Only five branch transactions were completed in the first half of 2020, but the range of their implied premiums is in line with 2019 levels. Several branch transactions have been announced since the onset of the pandemic; however, pricing information is not available for any of them.

Accounting For CDI Assets

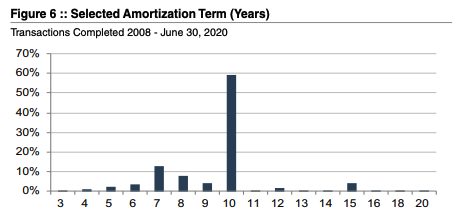

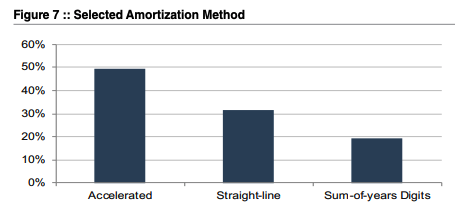

Based on data for the acquisitions in which core deposit intangible detail was reported, a majority of banks selected a ten-year amortization term for the CDI values booked. Fewer than 10% of transactions for which data were available selected amortization terms longer than ten years. Amortization methods were somewhat more varied, but an accelerated amortization method was selected in approximately half of these transactions.
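The difference between the two families of amortization methods is easiest to see in a schedule. The data above do not specify which accelerated methods were used. The sketch below therefore uses sum-of-years'-digits purely as one example of an accelerated method and compares it with straight-line amortization over the common ten-year term. The CDI amount is hypothetical.

```python
def straight_line(cdi: float, years: int = 10) -> list[float]:
    """Equal amortization expense in every year."""
    return [cdi / years] * years


def sum_of_years_digits(cdi: float, years: int = 10) -> list[float]:
    """Accelerated amortization: the weight declines from years/total down to 1/total."""
    total = years * (years + 1) // 2  # 1 + 2 + ... + years
    return [cdi * (years - t) / total for t in range(years)]


cdi = 5.0  # hypothetical CDI booked, $ millions
for year, (sl, syd) in enumerate(zip(straight_line(cdi), sum_of_years_digits(cdi)), start=1):
    print(f"Year {year:2d}: straight-line {sl:.3f}  sum-of-years'-digits {syd:.3f}")
```

Both schedules fully amortize the CDI over ten years. The accelerated schedule simply recognizes more of the expense in the early years.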

For more information about Mercer Capital’s core deposit valuation services, please contact us.

Originally appeared in Mercer Capital’s Bank Watch, August 2020.

Valuation Considerations in Bankruptcy Proceedings

The outbreak of COVID-19 in the United States has caused a severe public health crisis and an unprecedented level of economic disruption. While some economic activity is beginning to come back, predictions for longer-term negative economic impacts have also become more prevalent.

The initial thoughts of a quick V-shaped economic recovery have been replaced in all but the most optimistic minds with a more nuanced consideration of how this situation will impact businesses within different industries and geographic areas over the next several years.

In some of the most hard-hit industries, we are already seeing what is expected to be a prolonged surge in corporate restructurings and bankruptcy filings.

The decision to file for bankruptcy does not necessarily signal the demise of the business. If executed properly, Chapter 11 reorganization affords a financially distressed or insolvent company an opportunity to restructure its liabilities and emerge from the proceedings as a viable going concern. Along with a bankruptcy filing (more typically before and/or in preparation for the filing), the company usually undertakes a strategic review of its operations, including opportunities to shed assets or even lines of business. During the reorganization proceeding, stakeholders, including creditors and equity holders, negotiate and litigate to establish economic interests in the emerging entity. The Chapter 11 reorganization process concludes when the bankruptcy court confirms a reorganization plan that both specifies a reorganization value and reflects the agreed-upon strategic direction and capital structure of the emerging entity.

In addition to fulfilling technical requirements of the bankruptcy code and providing adequate disclosure, two characteristics of a reorganization plan are germane from a valuation perspective1:

- The plan should demonstrate that the economic outcomes for any consenting stakeholders are superior under the Chapter 11 proceeding compared to a Chapter 7 proceeding, which provides for more direct relief through a liquidation of the business. This is generally referred to as the “best interests test.”

- The plan should demonstrate that, upon confirmation by the bankruptcy court, it will not likely result in liquidation or further reorganization of the business. This is generally referred to as the “cash flow test.”

Finally, upon emerging from bankruptcy, companies are required to apply “fresh start” accounting, under which all assets of the company, including identifiable intangible assets, are recorded on the balance sheet at fair value.

Best Interests Test

Within the context of a best interests test, valuation specialists can provide useful financial advice to:

- Establish the value of the business under a Chapter 7 liquidation premise.

- Measure the reorganization value of a business, which, absent liquidation, represents the economic “pie” from which stakeholder claims can be satisfied. A plan confirmed by a bankruptcy court should establish a reorganization value that exceeds the value of the company under a liquidation premise.

A Floor Value: Liquidation Value

If a company can no longer pay its debts and does not restructure, it will undergo Chapter 7 liquidation. The law generally mandates that Chapter 11 restructuring only be approved if it provides a company’s creditors with their highest level of expected repayment. The Chapter 11 restructuring plan must be in the best interest of the creditors (relative to Chapter 7 liquidation) in order for it to be approved. Given this understanding of the law, the first valuation step in successful Chapter 11 restructuring is assessing the alternative, liquidation value. This value will be a threshold that any reorganization plan must outperform in order to be accepted by the court.


Determining the value of a business in liquidation is unfortunately not as simple as finding the fair market value, or even the book value, of all the assets. The liquidation premise generally contemplates a sale of the company’s assets within a short period. Any valuation must account for the fact that, without adequate time to market the assets in the open market, the price obtained is usually lower than fair market value.

Everyone has seen the “inventory liquidation sale” sign or the “going out of business” sign in the shop window. Experience tells us that the underlying “marketing period” assumptions made in a liquidation analysis can have a material impact on the valuation conclusion.

From a technical perspective, liquidation value can be estimated under three premises: assemblage of assets, orderly liquidation, and forced liquidation. As the names imply, these are asset-based approaches to valuation that differ in their assumptions about the marketing period and the manner in which the assets are disposed of. There are no strict guidelines in the bankruptcy process related to these three premises; bankruptcy courts generally determine the applicable premise of value on a case-by-case basis.

The determination (and support) of the appropriate premise can be an important component to the best interests test.

In general, the discount from fair market value implied by the price obtainable under a liquidation premise is related to the liquidity of an asset. Accordingly, valuation analysts often segregate the assets of the petitioner company into several categories based upon the ease of disposal.

Liquidation value is estimated for each category by referencing available discount benchmarks. For example, no haircut would typically be applied to cash and equivalents, while less liquid assets (such as accounts receivable or inventory) would likely incur significant discounts. For some asset categories, the appropriate level of discount can be estimated by analyzing the prices commanded in sales of comparable assets under a similarly distressed sale scenario.
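The category-by-category approach can be sketched in a few lines. All balances and recovery rates below are hypothetical placeholders, not published benchmarks. The sketch shows only two things: each category carries its own recovery rate, and a forced liquidation, with its shorter marketing period, generally implies lower recoveries than an orderly one.

```python
# Hypothetical carrying amounts by asset category ($ millions).
ASSETS = {
    "cash": 4.0,
    "accounts_receivable": 12.0,
    "inventory": 18.0,
    "equipment": 25.0,
}

# Hypothetical recovery rates (1.0 = no haircut) under two liquidation premises.
RECOVERY_RATES = {
    "orderly": {"cash": 1.00, "accounts_receivable": 0.80, "inventory": 0.55, "equipment": 0.50},
    "forced": {"cash": 1.00, "accounts_receivable": 0.65, "inventory": 0.35, "equipment": 0.30},
}


def liquidation_value(assets: dict[str, float], recovery_rates: dict[str, float]) -> float:
    """Sum of each category's carrying amount times its recovery rate."""
    return sum(amount * recovery_rates[category] for category, amount in assets.items())


for premise, rates in RECOVERY_RATES.items():
    print(f"{premise:>7}: {liquidation_value(ASSETS, rates):.1f}")
```

With these placeholder inputs, the orderly premise yields about $36.0 million and the forced premise about $25.6 million. The gap is driven entirely by the assumed discounts on receivables, inventory, and equipment.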

Reorganization Value

Once an accurate liquidation value is established, the next step is determining whether the company can be reorganized in a way that provides more value to a company’s stakeholders than discounted asset sales. ASC 852 defines reorganization value as2:

The value attributable to the reconstituted entity, as well as the expected net realizable value of those assets that will be disposed of before reconstitution occurs. This value is viewed as the value of the entity before considering liabilities and approximates the amount a willing buyer would pay for the assets of the entity immediately after restructuring.

Typically the “value attributable to the reconstituted entity,” the new enterprise value for the restructured business, is the largest element of the total reorganization value. Unlike a liquidation, this enterprise value falls under what valuation professionals call a “going concern” value premise. This means that the business is valued based on the return that would be generated by the future operations of the emerging, restructured entity and not what one would be paid for selling individual assets.


The intangible elements of going concern value result from factors such as having a trained work force, a loyal customer base, an operational plant, and the necessary licenses, systems, and procedures in place. To measure enterprise value in this way, reorganization plans primarily use a type of income approach, the discounted cash-flow (DCF) method. The DCF method estimates the net present value of future cash-flows that the emerging entity is expected to generate. Implementing the discounted cash-flow methodology requires three basic elements:

- Forecast of Expected Future Cash Flows. Guidance from management can be critical in developing a supportable cash-flow forecast. Generally, valuation specialists develop cash flow forecasts for discrete periods that may range from three to ten years. Conceptually, one would forecast discrete cash flows for as many periods as necessary until a stabilized cash-flow stream can be anticipated. Due to the opportunity to make broad strategic changes as part of the reorganization process, cash flows from the emerging entity must be projected for the period when the company expects to execute its restructuring and transition plans. Major drivers of the cash flow forecast include projected revenue, gross margins, operating costs and capital expenditure requirements. The historical experience of the petitioner company, as well as information from publicly traded companies operating in similar lines of business can provide reference points to evaluate each element of the cash-flow forecast.

- Terminal Value. The terminal value captures the value of all cash flows after the discrete forecast period. It is typically determined by using assumptions about the long-term cash flow growth rate and the discount rate to capitalize cash flow at the end of the forecast period. In other words, the model takes the cash flow for the last discrete year, grows it at a constant rate in perpetuity, and capitalizes it at the discount rate less that growth rate. In some cases, the terminal value may be estimated by applying current or projected market multiples to the projected results in the last discrete year. An average EV/EBITDA multiple of comparable companies, for instance, might be used to estimate a likely market value of the business at that date.

- Discount Rate. The discount rate is used to estimate the present value of the forecasted cash flows. Valuation analysts develop a suitable discount rate using assumptions about the costs of equity and debt capital, and the capital structure of the emerging entity. Costs of equity capital are usually estimated by utilizing a build-up method that uses the long-term risk-free rate, equity risk premia, and other industry or company-specific factors as inputs. The cost of debt capital and the likely capital structure may be based on benchmark rates on similar issues and the structures of comparable companies. Overall, the discount rate should reasonably reflect the operational and market risks associated with the expected cash-flows of the emerging entity.
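The discount rate inputs described above can be combined as follows. This is a minimal sketch that assumes a simple additive build-up for the cost of equity and a standard weighted average cost of capital (WACC) with an after-tax cost of debt. The tax rate parameter and all numeric inputs are hypothetical assumptions, not benchmarks.

```python
def build_up_cost_of_equity(
    risk_free_rate: float,
    equity_risk_premium: float,
    industry_premium: float = 0.0,
    company_specific_premium: float = 0.0,
) -> float:
    """Additive build-up: risk-free rate plus each risk premium (all decimals)."""
    return risk_free_rate + equity_risk_premium + industry_premium + company_specific_premium


def weighted_average_cost_of_capital(
    cost_of_equity: float,
    pretax_cost_of_debt: float,
    debt_weight: float,  # debt / (debt + equity) in the emerging capital structure
    tax_rate: float,     # assumption: interest is tax-deductible at this rate
) -> float:
    """Blend the costs of equity and after-tax debt by their capital structure weights."""
    if not 0.0 <= debt_weight <= 1.0:
        raise ValueError("debt_weight must be between 0 and 1")
    equity_weight = 1.0 - debt_weight
    return equity_weight * cost_of_equity + debt_weight * pretax_cost_of_debt * (1.0 - tax_rate)


# Hypothetical inputs, for illustration only.
ke = build_up_cost_of_equity(0.015, 0.060, industry_premium=0.020, company_specific_premium=0.030)
wacc = weighted_average_cost_of_capital(ke, pretax_cost_of_debt=0.08, debt_weight=0.40, tax_rate=0.25)
print(f"Cost of equity {ke:.1%}, WACC {wacc:.1%}")
```

With these placeholder inputs, the cost of equity is about 12.5% and the WACC about 9.9%. The debt weight should reflect the capital structure the emerging entity is expected to carry, not the pre-petition balance sheet.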

The sum of the present values of all the forecasted cash flows, including discrete period cash flows and the terminal value, provides an indication of the business enterprise value of the emerging entity for a specific set of forecast assumptions.

The reorganization value is the sum of that expected business enterprise value of the emerging entity and proceeds from any sale or other disposal of assets during the reorganization.
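The DCF mechanics and the final summation into reorganization value can be sketched as follows. The sketch assumes end-of-year discounting and a Gordon growth terminal value: the last discrete cash flow is grown one year at the long-term rate and capitalized at the discount rate less that rate. An exit-multiple terminal value is shown for comparison. Every function name and input is hypothetical, and a real analysis would need many refinements.

```python
def pv_discrete(cash_flows: list[float], discount_rate: float) -> float:
    """Present value of the discrete-period cash flows, discounted at year-end."""
    return sum(cf / (1.0 + discount_rate) ** t for t, cf in enumerate(cash_flows, start=1))


def gordon_terminal_value(final_cash_flow: float, discount_rate: float, growth: float) -> float:
    """Value, at the end of the forecast, of all later cash flows growing at a constant rate."""
    if discount_rate <= growth:
        raise ValueError("discount rate must exceed the long-term growth rate")
    return final_cash_flow * (1.0 + growth) / (discount_rate - growth)


def enterprise_value(cash_flows: list[float], discount_rate: float, terminal_value: float) -> float:
    """Discrete-period PV plus the terminal value discounted from the last forecast year."""
    n = len(cash_flows)
    return pv_discrete(cash_flows, discount_rate) + terminal_value / (1.0 + discount_rate) ** n


# Hypothetical forecast for the emerging entity ($ millions).
forecast = [8.0, 9.0, 10.0, 10.5, 11.0]
rate, growth = 0.10, 0.03
ev_gordon = enterprise_value(forecast, rate, gordon_terminal_value(forecast[-1], rate, growth))

# Alternative terminal value: hypothetical 6.0x EV/EBITDA applied to final-year EBITDA of 15.0.
ev_multiple = enterprise_value(forecast, rate, 6.0 * 15.0)

disposal_proceeds = 5.0  # hypothetical net proceeds from assets sold during the reorganization
reorganization_value = ev_gordon + disposal_proceeds
print(f"EV (Gordon) {ev_gordon:.1f}, EV (multiple) {ev_multiple:.1f}, "
      f"reorganization value {reorganization_value:.1f}")
```

Small changes to the discount rate or growth rate move the terminal value, and therefore the total, substantially. This sensitivity is one reason different stakeholders can arrive at different reorganization values.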

Since the DCF-determined part of this value relies on so many forecast assumptions, different stakeholders may independently develop distinct estimates of the reorganization value to facilitate negotiations or litigation.

The eventual confirmed reorganization plan, however, reflects the terms agreed upon by the consenting stakeholders and specifies either a range of reorganization values or a single point estimate.

In conjunction with the reorganization plan, the courts also approve the amounts of allowed claims or interests for the stakeholders in the restructuring entity. From the perspective of the stakeholders, the reorganization value represents all of the resources available to meet the post-petition liabilities (liabilities from continued operations during restructuring) and allowed claims and interests called for in the confirmed reorganization plan.

If this agreed-upon reorganization value exceeds the value stakeholders would receive in a liquidation, only one more valuation hurdle remains: the cash flow test. This test examines whether the restructuring creates a company that will be viable over the long term, that is, one that is not likely to be back in bankruptcy court in a few years.

Cash Flow Test

For a company that passes the best interests test, this second requirement represents the last valuation hurdle to successfully emerging from Chapter 11 restructuring. Within the context of a cash flow test, valuation specialists can demonstrate the viability of the emerging entity’s proposed capital structure, including debt amounts and terms, given the stream of cash flows that can reasonably be expected from the business. The cash flow test essentially represents a test of the company’s current and projected future financial solvency.

Even if a company shows that the restructuring plan will benefit stakeholders relative to liquidation, the court will still reject the plan if it is likely to lead to liquidation or further restructuring in the foreseeable future. To satisfy the court, a cash flow test is used to analyze whether the restructured company would generate enough cash to consistently pay its debts. This cash flow test can be broken into three parts.


The first step in conducting the cash flow test is to identify the cash flows that the restructured company will generate. These cash flows are available to service all the obligations of the emerging entity. A stream of cash flows has already been developed in the DCF analysis used to determine the reorganization value. In practice, therefore, establishing the appropriate cash flows for the cash flow test is often a straightforward matter of carrying those projections into the new model.

Once the fundamental cash flow projections are incorporated, analysts then model the negotiated or litigated terms attributable to the creditors of the emerging entity. This involves projecting interest and principal payments to the creditors, including any amounts due to providers of short-term working capital facilities. These are the payments for each period that the cash flow generated up to that point must be able to cover in order for the company to avoid another bankruptcy.

The cash flows of the company will not be used only to pay debts, and so the third and final step in the cash flow test is documenting the impact of the net cash flows on the entire balance sheet of the emerging entity. This entails modeling changes in the company’s asset base as portions of the expected cash flows are invested in working capital and capital equipment; and modeling changes in the debt obligations of and equity interests in the company as the remaining cash flows are disbursed to the capital providers.
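The three steps above can be sketched as a year-by-year roll-forward. This minimal illustration rests on three hypothetical assumptions. First, the projected cash flows are already net of the working capital and capital expenditure investments described in the third step. Second, interest accrues on the opening debt balance. Third, any cash left after debt service stays on the balance sheet. The function name, the minimum-cash threshold, and all figures are illustrative.

```python
from dataclasses import dataclass


@dataclass
class YearEnd:
    year: int
    interest: float
    principal: float
    ending_cash: float
    ending_debt: float


def cash_flow_test(
    cash_flows: list[float],          # step 1: cash flows after reinvestment, by year
    opening_cash: float,
    opening_debt: float,
    interest_rate: float,             # step 2: negotiated terms of the emerging debt
    principal_schedule: list[float],  # scheduled principal payment for each year
    minimum_cash: float = 0.0,        # hypothetical liquidity floor
) -> tuple[bool, list[YearEnd]]:
    """Roll cash and debt forward (step 3) and report whether cash stays at or above the floor."""
    cash, debt = opening_cash, opening_debt
    schedule: list[YearEnd] = []
    for year, (cf, scheduled) in enumerate(zip(cash_flows, principal_schedule), start=1):
        interest = debt * interest_rate
        principal = min(scheduled, debt)  # never repay more than is outstanding
        cash += cf - interest - principal
        debt -= principal
        schedule.append(YearEnd(year, interest, principal, cash, debt))
    passes = all(row.ending_cash >= minimum_cash for row in schedule)
    return passes, schedule


# Hypothetical plan: $30 million of debt at 10%, amortizing $10 million a year.
ok, rows = cash_flow_test([15.0, 15.0, 15.0], opening_cash=2.0, opening_debt=30.0,
                          interest_rate=0.10, principal_schedule=[10.0, 10.0, 10.0])
for row in rows:
    print(row)
print("Passes cash flow test:", ok)
```

In this example the plan passes because year-end cash never falls below zero. With $10 million of annual cash flow instead of $15 million, the same structure would run out of cash in the first year.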

A reorganization plan is generally considered viable if such a detailed cash-flow model indicates solvent operations for the foreseeable future. The answer, however, is typically not so simple as assessing a single cash flow forecast.

Management’s base case forecast rarely fails the cash flow test. The entire reorganization plan is built on this forecast, so the cash flow projections have almost certainly been prepared with an eye toward meeting this requirement.

Viability is proven not only by passing the cash flow test on a base case scenario but also maintaining financial viability under some set of reasonable projections in which the company (or industry, or general economy) underperforms the base level of expectations. This “stress-testing” of the company’s financial projection is a critical component of a meaningful cash flow test.
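One simple way to stress-test a plan is to ask how large a uniform shortfall against the base case forecast the proposed capital structure can absorb before cash turns negative. The sketch below finds that break-even haircut by bisection. A uniform percentage haircut is only one hypothetical stress design; a real analysis would typically model specific downside scenarios. The names and figures are illustrative.

```python
def lowest_cash_balance(cash_flows, opening_cash, opening_debt, interest_rate, principal_schedule):
    """Minimum year-end cash over the forecast, after interest and scheduled principal."""
    cash, debt, lowest = opening_cash, opening_debt, float("inf")
    for cf, scheduled in zip(cash_flows, principal_schedule):
        interest = debt * interest_rate
        principal = min(scheduled, debt)
        cash += cf - interest - principal
        debt -= principal
        lowest = min(lowest, cash)
    return lowest


def break_even_haircut(cash_flows, opening_cash, opening_debt, interest_rate,
                       principal_schedule, tolerance=1e-6):
    """Largest uniform cut to every year's cash flow that keeps year-end cash >= 0.

    Returns None if the base case itself fails. Bisection works because debt
    service does not depend on the cash flows, so a bigger haircut can only
    lower the cash balance.
    """
    def solvent(haircut):
        stressed = [cf * (1.0 - haircut) for cf in cash_flows]
        return lowest_cash_balance(stressed, opening_cash, opening_debt,
                                   interest_rate, principal_schedule) >= 0.0

    if not solvent(0.0):
        return None
    if solvent(1.0):
        return 1.0
    low, high = 0.0, 1.0  # solvent at low, insolvent at high
    while high - low > tolerance:
        mid = (low + high) / 2.0
        if solvent(mid):
            low = mid
        else:
            high = mid
    return low


# Hypothetical plan: $30 million of debt at 10%, amortizing $10 million a year.
cushion = break_even_haircut([15.0, 15.0, 15.0], 2.0, 30.0, 0.10, [10.0, 10.0, 10.0])
print(f"Plan stays solvent with cash flows up to {cushion:.1%} below the base case")
```

With these inputs the cushion is roughly 23%, and the second year is the binding one. Whether that cushion is adequate is a judgment about how far the company, its industry, or the economy could plausibly underperform.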

“Fresh Start” Accounting

Companies emerging from Chapter 11 bankruptcy are required to re-state their balance sheets to conform to the reorganization value and plan.

- On the left side of the balance sheet, emerging companies need to allocate the reorganization value to the various tangible and identifiable intangible assets the post-bankruptcy company owns. To the extent the reorganization value exceeds the sum of the fair value of individual identifiable assets, the balance is recorded as goodwill.

- On the right side of the balance sheet, the claims of creditors are re-stated to conform to the terms of the reorganization plan.
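The asset-side allocation described above reduces to a residual calculation. A minimal sketch follows, with hypothetical asset names and fair values. It deliberately covers only the case described here, where reorganization value exceeds the fair value of the identifiable assets, and does not model the opposite case.

```python
def fresh_start_goodwill(reorganization_value: float, identifiable_assets: dict[str, float]) -> float:
    """Goodwill = reorganization value minus the fair value of identifiable assets."""
    excess = reorganization_value - sum(identifiable_assets.values())
    if excess < 0:
        raise ValueError("reorganization value below identifiable assets is outside this sketch")
    return excess


# Hypothetical fair values ($ millions) assigned to the emerging entity's assets.
assets = {
    "current_assets": 30.0,
    "property_plant_equipment": 70.0,
    "customer_relationships": 20.0,
    "trade_name": 10.0,
}
print(f"Goodwill: {fresh_start_goodwill(150.0, assets):.1f}")  # prints "Goodwill: 20.0"
```

Because goodwill is the residual, every dollar of fair value assigned to an identifiable intangible asset, such as customer relationships, reduces goodwill by a dollar.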

Implementing “fresh start” accounting requires valuation expertise to develop reasonably accurate fair value measurements.

Conclusion

Although the Chapter 11 process can seem burdensome, a rigorous assessment of cash flows and a company’s capital structure can help the company as it develops a plan for years of future success.

We hope that this explanation of the key valuation-related steps of a Chapter 11 restructuring helps managers realize this potential. However, we also understand that executives going through a Chapter 11 restructuring process need to juggle an extraordinary set of additional responsibilities—evaluating alternate strategies, implementing new and difficult business plans, and negotiating with various stakeholders.

Given their multitude of other responsibilities, executives often decide that it is best to seek help from outside, third-party specialists.


Valuation specialists can relieve some of the burden from executives by developing the valuation and financial analysis necessary to satisfy the requirements for a reorganization plan to be confirmed by a bankruptcy court. Specialists can also provide useful advice and perspective during the negotiation of the reorganization plan to help the company emerge with the best chance of success.

With years of experience advising companies on valuation-related issues, Mercer Capital’s professionals are well positioned to help in both of these roles. For a confidential conversation about your company’s current financial position and how we might assist in your bankruptcy-related analyses, please contact a Mercer Capital professional.

1Accounting Standards Codification Topic 852, Reorganizations (“ASC 852”). ASC 852-05-8.

2ASC 852-10-20.

Estate Planning Opportunities in the Current Environment


In this July 21, 2020 webcast, Travis W. Harms of Mercer Capital and Brook H. Lester of Diversified Trust share their insights with family business directors and their advisors.

As family business leaders tackle the many operating challenges thrust upon them by the COVID-19 pandemic, it is tempting to let tasks like estate planning fall to the bottom of the to-do list. While estate planning may appear to be less pressing than other issues, the positive impact of effective planning on the long-term health of both the family and the family business is hard to overstate.

If your family business is overdue for some ownership transitions, you should not miss the current opportunity for tax-efficient estate planning transfers.

The Importance of the Valuation Date in Divorce

During the divorce process, a listing of assets and liabilities, often referred to as a marital balance sheet or marital estate, is established for the purpose of dividing assets between the divorcing parties. Some assets are easily valued, such as a brokerage or retirement account holding marketable securities with readily available prices. Other assets, such as a business or an ownership interest in a business, are not as easily valued and require the expertise of a business appraiser. When a valuation or financial expert is retained, it is important for the expert and the family law attorney to understand and agree upon certain factors that set a baseline for the valuation. These factors may be state-specific, such as case precedent and state statute. One of these considerations is the valuation date, which differs from state to state.

Valuation Date Defined

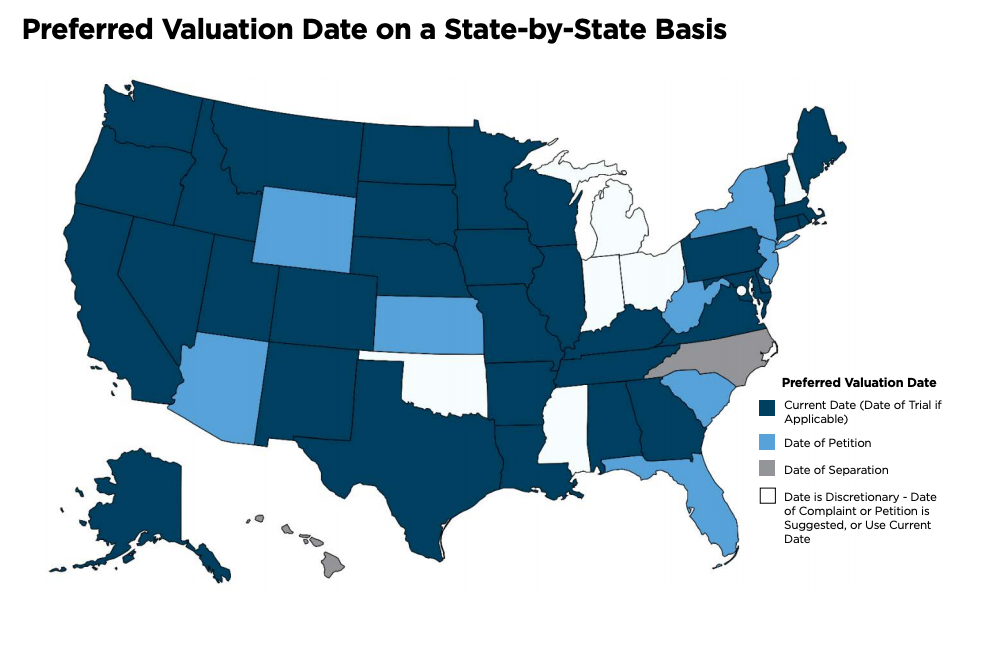

The valuation date represents the point in time at which the business, or business interest(s), is being valued. The majority of states have adopted the use of a current date, usually as close as possible to the mediation or trial date. Other states use the date of separation or the date the divorce complaint/petition was filed. See the map above for a summary of preferred valuation dates by state (note that the summary may need to be modified for recent updates in state precedent).

States that use the date of separation or the date of the complaint/petition as the valuation date face additional “noise” and complexity when the divorce process becomes lengthy or when there are significant impacts to the economy or to the industry in which the business operates.

As an example, consider the timeframe from December 31, 2019 to the present (Summer 2020) and the economic reverberations of COVID-19. Valuations as of these two dates will look quite different due to changes in actual business performance as well as shifts in future expectations and outlooks for the business and its industry. However, this is not only a consideration for states that use the date of separation or the date of petition. It is also an important consideration for matters that have extended over a prolonged period, and it is critical for current matters. Much has transpired since December 31, 2019, so a valuation as of that date may no longer accurately reflect the overall picture of the business, necessitating a secondary valuation or an update to the prior valuation.

Let’s take a deeper dive into the importance of the valuation date in the divorce process.

Why Does the Valuation Date Matter?

Laws differ from state to state regarding valuation date and standard of value (generally fair market value or fair value). There are other nuances in the business valuation process for divorce, such as the premise of value, which is often a going-concern value as opposed to a liquidation value. After the standard of value, premise of value, and valuation date have been established, the business appraiser must incorporate the relevant facts and circumstances known or knowable as of the valuation date when reaching a valuation conclusion. These facts and trends are reflected in historical financial performance, anticipated future operations, and industry and economic conditions, and they can vary depending on the valuation date.

Using our prior example, the conditions of Summer 2020 are vastly different from those at year-end 2019 due to COVID-19. For many businesses, actual financial performance in 2020 has been materially different from what was expected for 2020 during December 2019 budgeting processes. The current environment has made the facts and circumstances underlying anticipated future budgets, both short-term and long-term, even more meaningful. The income approach reflects the present value of all future cash flows. So, even if a business is performing at lower levels today, that may not be a permanent impact, particularly if a rebound is anticipated. Thus, value today may be affected by a short-term decrease in earnings, but an anticipated future rebound will also affect the valuation today.
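To illustrate why a temporary dip need not translate into a proportional decline in value, the minimal Python sketch below discounts two hypothetical five-year cash flow streams: one reflecting a December 2019 budget, and one reflecting a 2020 decline followed by a rebound. The cash flows, the 15% discount rate, the 3% terminal growth rate, and the use of a Gordon growth terminal value are all illustrative assumptions, not figures from any engagement.

```python
def present_value(cash_flows, discount_rate, terminal_growth):
    """Present value of year-end cash flows (years 1..n) plus a Gordon growth
    terminal value based on the final year's cash flow."""
    if discount_rate <= terminal_growth:
        raise ValueError("discount rate must exceed terminal growth rate")
    pv = 0.0
    for year, cash_flow in enumerate(cash_flows, start=1):
        pv += cash_flow / (1 + discount_rate) ** year
    n = len(cash_flows)
    terminal_value = cash_flows[-1] * (1 + terminal_growth) / (discount_rate - terminal_growth)
    return pv + terminal_value / (1 + discount_rate) ** n


# Hypothetical cash flows ($000s), for illustration only.
budget_dec_2019 = [1000, 1030, 1061, 1093, 1126]
dip_then_rebound = [700, 950, 1061, 1093, 1126]  # 30% drop in year 1, recovered by year 3

base_value = present_value(budget_dec_2019, 0.15, 0.03)
stressed_value = present_value(dip_then_rebound, 0.15, 0.03)
decline = 1 - stressed_value / base_value
print(f"Year-1 cash flow decline: 30%; value decline: {decline:.1%}")
```

Under these assumptions the value decline is roughly 4%, far smaller than the 30% first-year drop, because most of the value sits in later years and the terminal value. If no rebound were expected, the later cash flows and the terminal value would fall too, and the decline in value would be much larger.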

It must be pointed out that it would be incorrect to consider the impact of COVID-19 for a valuation date prior to approximately March 2020, as the economic impact of the pandemic was not reasonably known or knowable before then. The valuation date is therefore meaningful and a significant consideration in any valuation process, especially in current conditions. In a state that typically requires the date of separation, the parties may consider agreeing to a more recent date, since so much has changed between then and now.

How Have Valuations Been Affected by COVID-19?

Valuations of any privately held company involve understanding and considering many factors. We try to avoid absolutes such as “always” and “never” in valuations. The true answer to the question of how valuations of privately held companies have been affected by the coronavirus is “It depends.”

- It depends on what industry the business operates in and how that industry has been impacted (whether negatively or positively) by COVID-19 conditions.

- It depends on where the subject company is located, as the timing of openings and closures has differed throughout the country and globally.

- It depends on what markets the subject company serves. As we have seen, and continue to see, across the country, stay-at-home restrictions have varied greatly from state to state, and certain areas have been more severely affected than others. Certain industries, such as airlines, hospitality, retail, and restaurants, have been far more affected than others.

As a general benchmark, the overall performance of the stock market from the beginning of 2020 until now can serve as a guide. The stock market has been volatile since the global impact of COVID-19 began to unfold in March. Company-specific indicators, such as actual performance and the economic and industry conditions within the company’s geographic footprint, also govern the extent of any change in value.

Valuation Date Considerations for Lengthy Processes

The valuation date for purposes of business valuation in a marital dissolution is an important issue even without the current COVID-19 conditions. Consider matters that extend over multiple years from the time of separation to the divorce decree. Has the value of the business changed during this time? If the answer is yes, or even maybe, some clients may weigh the cost of another valuation. However, the importance of an accurate and timely valuation should far outweigh concerns about the additional expense of updating a conclusion.

It is important to discuss these elements with your expert, as the process may depend on the length of time that has passed since the original valuation and on the facts and circumstances of the business, economy, and industry. Your expert will be able to determine whether simply updating prior calculations is an acceptable update; however, if much has changed, such as expectations for the future performance of the business, the approach may involve a secondary valuation as of a current valuation date.

Another consideration to keep in mind: depending on the jurisdiction, state law may deem the value of the business after separation but before divorce to be separate property. If this is the case, two valuation dates are necessary.

Concluding Thoughts

The litigation environment is complex and already rife with doom-and-gloom expectations. We have previously written about the phenomenon referred to as the divorce recession in family law engagements. Understanding the valuation date for an asset such as a privately held business in a marital dissolution is an important consideration, especially for matters that have extended over a lengthy period and those that may be affected by significant global events such as COVID-19. Speak to your valuation expert when these matters arise. The already complex process of business valuation becomes even more complex with the passage of time and in the midst of economic uncertainty.

Cautionary Tales of Valuation Adjustments in Litigation

Valuations of a closely held business in the context of litigation, such as a contentious divorce, shareholder dispute, damages matter, or other litigated corporate matter, can be multifaceted and may require additional forensic scrutiny. As such, it is important to consider the potential forensic implications of valuation adjustments, as they may lead to other analyses. For example, in a divorce business appraisal, a valuation adjustment for discretionary (personal) expenses may, in turn, imply a spouse’s “true income” and ability to pay. This is but one example; this session addresses other examples you will likely face.

Presented at the NACVA 2020 Business Valuation and Financial Litigation Virtual Super Conference.

Stress Testing and Capital Planning for Banks and Credit Unions During the COVID-19 Pandemic

A stress test is defined as a risk management tool that consists of estimating the bank’s financial position over a time horizon – approximately two years – under different scenarios (typically a baseline, adverse, and severe scenario).

The concept of stress testing for banks and credit unions is akin to going in for a checkup and running on a treadmill so your cardiologist can measure how your heart performs under stress. Similar to stress tests performed by doctors, stress tests for financial institutions can ultimately improve the health of the bank or credit union (“CU”).

The benefits of stress testing for financial institutions include:

- Enhancing strategic/capital planning

- Improving risk management

- Enhancing the value and earning power of the bank or credit union

While many public companies in other industries have pulled earnings guidance due to uncertainty surrounding the economic outlook amid the coronavirus pandemic, community banks and CUs do not have that luxury. Key stakeholders, boards, and regulators will want a better understanding of the institution’s ability to withstand the severe economic shock of the pandemic. Fortunately, stress testing has been part of the banking lexicon since the last global financial crisis began in 2008, and we can leverage many lessons learned from the last decade or so of this annual exercise.

Conducting a Stress Test

It can be easy to get overwhelmed by scenario and capital planning against the backdrop of a global pandemic caused by a virus whose path and duration are ultimately uncertain. However, it is important to stay grounded in established stress testing steps and techniques.

Below we discuss the four primary steps that we take to help clients conduct a stress test in light of the current economic environment.

Step 1: Determine the Economic Scenarios to Consider

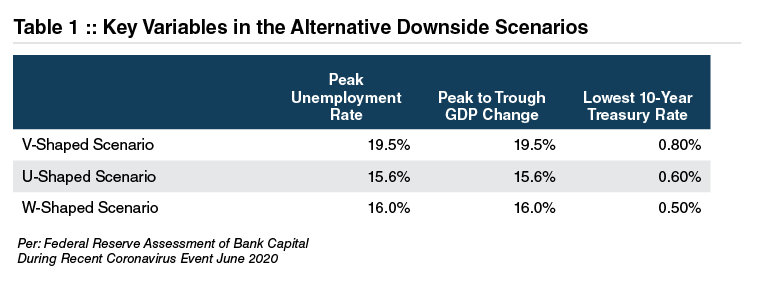

It is important to determine the appropriate stress event to consider. Unfortunately, the Federal Reserve’s original 2020 scenarios published in 1Q2020 seem less relevant today since they forecast peak unemployment at 10%, versus the recent peak national unemployment rate of 14.7% (April 2020). However, the Federal Reserve supplemented the original scenario with a sensitivity analysis for the 2020 stress testing round related to coronavirus scenarios in late 2Q20, which provides helpful insights.

The Federal Reserve’s sensitivity analysis had three alternative downside scenarios:

- A rapid V-shaped recovery that regains much of the output and employment lost by year-end 2020

- A slower, more U-shaped recovery in which only a small share of lost output and employment is regained in 2020

- A W-shaped double dip recession with a short-lived recovery followed by a severe drop in late 2020 due to a second wave of COVID

Some of the key macroeconomic variables in these scenarios are found in Table 1.

In our view, these scenarios provide community banks and credit unions with economic scenarios from which to begin a sound stress testing and capital planning framework.

Step 2: Segment the Loan Portfolio and Estimate Loan Portfolio Stress Losses

While determining potential loan losses due to the uncertainty from a pandemic can be particularly daunting, we can take clues from the Federal Reserve’s release of results in late 2Q20 from some of the largest banks. While the specific loss rates for specific banks weren’t disclosed, the Fed’s U, V, and W sensitivity analysis noted that aggregate loss rates were higher than both the Global Financial Crisis (“GFC”) and the Supervisory Capital Assessment Program (“SCAP”) assumptions from the prior downturn.

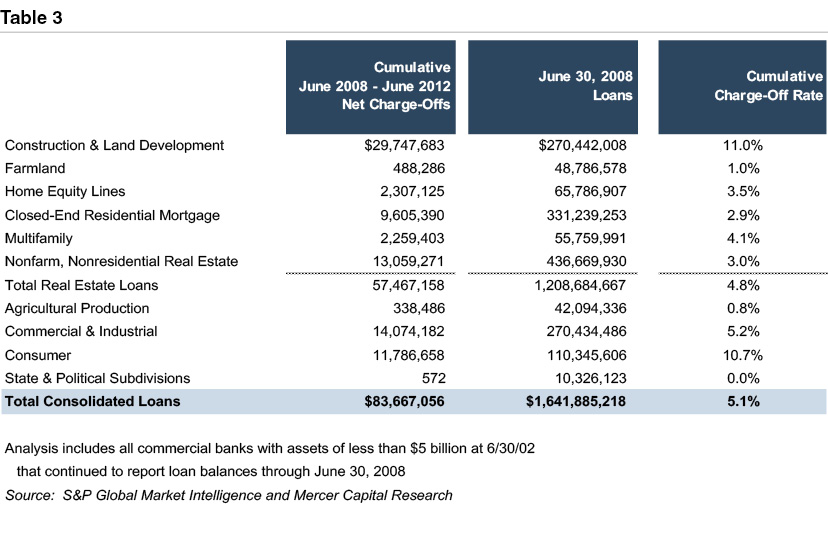

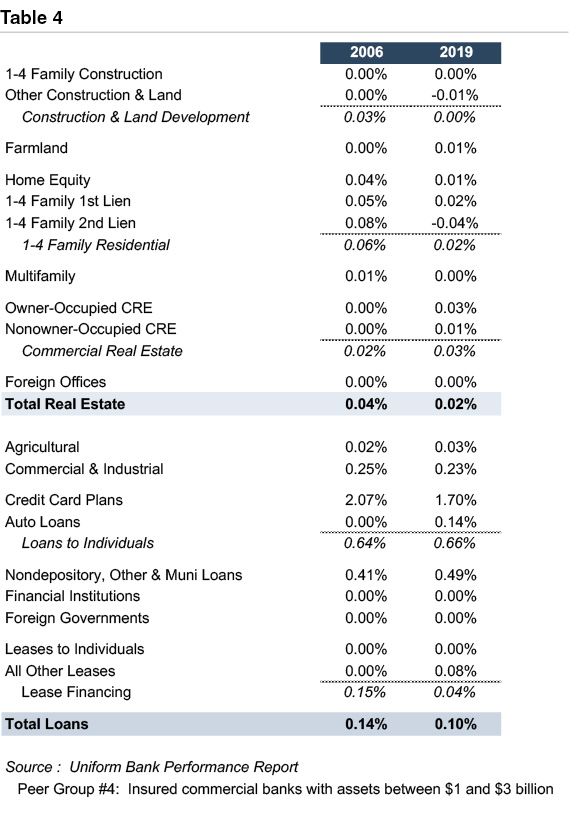

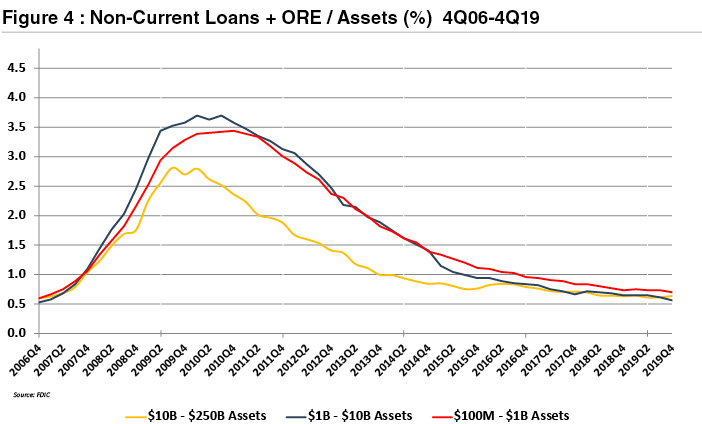

We note that many community banks and CUs may feel that their portfolios in aggregate will weather the COVID storm better than their larger counterparts (data provided in Table 2). We have previously noted that community bank loan portfolios are more diverse now than during the prior downturn and cumulative charge-offs were lower for community banks as a whole than the larger banks during the GFC. For example, cumulative charge-offs for community banks over a longer distressed time period during the GFC (four years, or sixteen quarters, from June 2008 – June 2012) were 5.1%, implying an annual charge-off rate during a stressed period of 1.28% (which is ~42% of what larger banks experienced during the GFC). However, we also note that this community bank loss history is likely understated by the survivorship bias arising from community banks that failed during the GFC.
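The annualized figure is a simple average of the cumulative charge-offs over the distressed period. The short Python sketch below reproduces that arithmetic and backs out the larger-bank annual rate implied by the ~42% comparison. That implied rate is derived from the rounded figures in the text, not a separately reported statistic.

```python
def annualized_rate(cumulative_rate, quarters):
    """Simple (non-compounded) annual rate implied by a cumulative rate."""
    return cumulative_rate / (quarters / 4)


community_cumulative = 0.051   # community banks, June 2008 - June 2012, per the text
community_annual = annualized_rate(community_cumulative, quarters=16)

# The text states community banks experienced ~42% of the larger-bank annual rate.
implied_larger_bank_annual = community_annual / 0.42

print(f"Community bank annual charge-off rate: {community_annual:.3%}")
print(f"Implied larger-bank annual charge-off rate: {implied_larger_bank_annual:.3%}")
```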

Each community bank or CU’s loan portfolio is unique, and it will be important for community banks and CUs to document the composition of their portfolios and segment them appropriately. The Fed noted that certain sectors will behave differently during the COVID downturn. The leisure, hospitality, tourism, retail, and food sectors are likely to carry higher credit risk during the pandemic. Proper loan segmentation should therefore break out higher-risk industry sectors as well as COVID-modified/restructured loans.
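A minimal Python sketch of that segmentation: each loan is grouped by loan type, with COVID-modified loans and loans to the higher-risk sectors named above broken out separately. The `Loan` fields, the sector labels, the sample loans, and the rule that a COVID modification takes precedence over sector are all hypothetical choices for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass

# Sectors the text identifies as likely higher credit risk during the pandemic.
HIGH_RISK_SECTORS = {"leisure", "hospitality", "tourism", "retail", "food"}


@dataclass
class Loan:
    loan_id: str
    loan_type: str          # hypothetical labels, e.g. "CRE", "C&I", "Consumer"
    sector: str
    balance: float
    covid_modified: bool = False


def segment_of(loan: Loan) -> str:
    """Assign a segment; a COVID modification takes precedence over sector."""
    if loan.covid_modified:
        return f"{loan.loan_type} / COVID-modified"
    if loan.sector in HIGH_RISK_SECTORS:
        return f"{loan.loan_type} / higher-risk sector"
    return f"{loan.loan_type} / other"


def balances_by_segment(loans):
    """Total outstanding balance in each segment."""
    totals = defaultdict(float)
    for loan in loans:
        totals[segment_of(loan)] += loan.balance
    return dict(totals)


portfolio = [  # hypothetical loans
    Loan("L1", "CRE", "hospitality", 2_500_000),
    Loan("L2", "CRE", "office", 1_800_000),
    Loan("L3", "C&I", "retail", 900_000, covid_modified=True),
    Loan("L4", "C&I", "manufacturing", 1_200_000),
]
for segment, balance in sorted(balances_by_segment(portfolio).items()):
    print(f"{segment}: ${balance:,.0f}")
```

Each segment can then carry its own loss history and stress assumptions in the steps that follow.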

Once the portfolio is segmented, loss history over an extended period and a full business cycle (likely 10-12 years of history) will be important to assess. While the current pandemic is a different event, this historical loss experience can be leveraged to provide insights into future prospects and underwriting strength during a downturn and relative to peer loss experience.

In certain situations, it may also be relevant to consider the correlation between those historical losses and certain economic factors such as the unemployment rate in the institution’s market areas. For example, a regression analysis can determine which variables were most significant statistically in driving historical losses during prior downturns and help determine which variables may be most relevant in the current pandemic. For those variables deemed statistically significant, the regression analysis can also provide a forecasting tool to estimate and/or test the reasonableness of future loss rates based on assumed changes in those variables that may be above and beyond historical experience.
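The single-variable version of that regression can be sketched in plain Python: fit historical annual loss rates against the market-area unemployment rate by ordinary least squares, check the slope's t-statistic for significance, and apply the fitted line to scenario unemployment rates. The loss and unemployment history and the scenario rates below are hypothetical. A multivariate analysis would more likely use a statistics package such as statsmodels.

```python
import math


def fit_ols(x, y):
    """Ordinary least squares for y = a + b*x; returns (a, b, t-statistic of b)."""
    n = len(x)
    if n < 3:
        raise ValueError("need at least three observations")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    sse = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    se_slope = math.sqrt(sse / (n - 2) / sxx)
    t_stat = slope / se_slope if se_slope > 0 else math.inf
    return intercept, slope, t_stat


# Hypothetical history: market-area unemployment (%) and net loss rate (%).
unemployment = [4.5, 5.5, 8.8, 9.4, 8.7, 7.9, 7.1, 6.0, 5.2, 4.8, 4.3, 3.8]
loss_rate = [0.12, 0.40, 1.35, 1.50, 1.15, 0.85, 0.65, 0.38, 0.22, 0.18, 0.14, 0.10]

a, b, t = fit_ols(unemployment, loss_rate)
print(f"intercept {a:.3f}, slope {b:.3f}, t-stat {t:.1f}")

# Hypothetical scenario unemployment rates; values beyond the historical range
# are extrapolations and deserve extra skepticism.
for scenario, rate in {"V": 9.0, "U": 12.0, "W": 14.0}.items():
    print(f"{scenario}-shaped: projected loss rate {a + b * rate:.2f}%")
```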

Lastly, higher risk loan portfolio segments (such as those in more economically exposed sectors) and larger loans that were modified during the pandemic may need to be supplemented by some “bottom-up” analysis of certain loans to determine how these credits may fare in the different economic scenarios previously described. To the extent losses can be modeled for each individual loan, these losses can be used to estimate losses for those particular loans and also leveraged to support assumptions for other loan portfolio segments.
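For the loan-level work, one common convention (an assumption here, not a method the text prescribes) is expected loss = exposure x probability of default x loss given default. The Python sketch below stresses each loan's probability of default by a hypothetical scenario multiplier and caps it at 100%. The loans, default probabilities, loss severities, and multipliers are all illustrative.

```python
# Hypothetical multipliers applied to each loan's baseline probability of default.
SCENARIO_PD_MULTIPLIERS = {"V": 1.5, "U": 2.5, "W": 3.0}


def expected_loss(exposure, prob_default, loss_given_default):
    """Expected loss = exposure x probability of default x loss given default."""
    return exposure * prob_default * loss_given_default


def scenario_losses(loans, multipliers):
    """Sum loan-level expected losses for each scenario, capping PD at 100%."""
    results = {}
    for scenario, multiplier in multipliers.items():
        results[scenario] = sum(
            expected_loss(exposure, min(1.0, prob * multiplier), lgd)
            for exposure, prob, lgd in loans
        )
    return results


# (exposure, baseline PD, LGD) for a few hypothetical modified hotel loans.
modified_loans = [
    (4_000_000, 0.04, 0.35),
    (2_500_000, 0.06, 0.40),
    (1_200_000, 0.40, 0.50),
]
total_exposure = sum(exposure for exposure, _, _ in modified_loans)
for scenario, loss in scenario_losses(modified_loans, SCENARIO_PD_MULTIPLIERS).items():
    print(f"{scenario}: ${loss:,.0f} ({loss / total_exposure:.1%} of balances)")
```

The resulting loss rates for the individually reviewed loans can then serve as a cross-check on the assumptions applied to similar segments.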

Step 3: Estimate the Impact of Stress on Earnings

Step 3 expands the focus beyond the loan portfolio and the potential pandemic credit losses modeled in Step 2 to the institution’s “core” earning power and the sensitivity of that earning power across the economic scenarios modeled in Step 1.

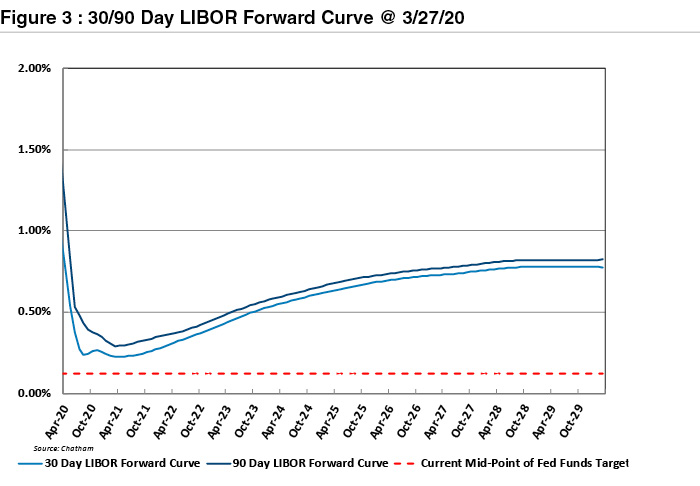

When assessing “core” earning power, it is important to consider the potential impact of the economic scenarios on the interest rate outlook and net interest margins (“NIM”). While the outlook is uncertain, Federal Reserve rate cuts have already started to crimp margins.

Beyond the headwinds brought about by the pandemic, it is also important to consider any potential tailwinds in certain countercyclical areas like mortgage banking, PPP loan income, and/or efficiency brought about by greater adoption of digital technology and cost savings from branch closures. Ultimately, the earnings model over the stressed period relies on key assumptions that need to be researched, explained, and supported related to NIM, earning assets, non-interest income, expenses/efficiency, and provision expense in light of the credit losses modeled in Step 2.
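At its simplest, the earnings model reduces to a few identities: net interest income (earning assets x NIM) plus non-interest income, less non-interest expense, gives pre-provision net revenue, from which the Step 2 provision is deducted. The Python sketch below runs that arithmetic for one hypothetical quarter. The inputs, the flat 21% tax rate, and the simplification of recording no tax benefit on pre-tax losses are assumptions for illustration.

```python
def quarterly_net_income(avg_earning_assets, annual_nim, noninterest_income,
                         noninterest_expense, provision, tax_rate=0.21):
    """Stressed net income for one quarter (all amounts in the same units)."""
    net_interest_income = avg_earning_assets * annual_nim / 4
    ppnr = net_interest_income + noninterest_income - noninterest_expense
    pretax_income = ppnr - provision
    # Simplification: taxes apply only to positive pre-tax income.
    taxes = max(pretax_income, 0.0) * tax_rate
    return pretax_income - taxes


# Hypothetical quarter ($ millions): $1 billion of earning assets at a 3.40% NIM,
# with non-interest income that could include mortgage banking or PPP fees.
baseline = quarterly_net_income(1_000, 0.034, 2.0, 7.0, provision=1.0)
severe = quarterly_net_income(1_000, 0.034, 2.0, 7.0, provision=6.0)
print(f"Baseline net income: {baseline:.2f}; severe scenario: {severe:.2f}")
```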

Step 4: Estimate the Impact of Stress on Capital

Step 4 combines the work done in Steps 1, 2, and 3 and models capital levels and ratios over the entire forecast period (normally nine quarters) in each economic scenario.

Capital at the end of the forecast period is ultimately a function of capital and reserve levels immediately prior to the stressed period plus earnings or losses generated over the stressed period (inclusive of credit losses and provisions estimated). When assessing capital ratios during the pandemic period, it is important to also consider the impact of any strategic decisions that may help to alleviate stress on capital during this period, such as raising sub-debt, eliminating distributions or share repurchases, and slowing balance sheet growth. For perspective, the results released from the Federal Reserve suggested that under the V, U, and W shaped alternative downside scenarios, the aggregate CET1 capital ratios were 9.5%, 8.1%, and 7.7%, respectively.
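A minimal capital roll-forward in Python: each quarter's CET1 capital equals the prior quarter's capital plus stressed net income less distributions, and the ratio divides by projected risk-weighted assets. The nine-quarter inputs are hypothetical, and the sketch ignores reserve mechanics and regulatory capital adjustments. It compares maintaining versus suspending the dividend, one of the strategic levers mentioned above.

```python
def project_cet1(starting_cet1, net_income, distributions, risk_weighted_assets):
    """Roll CET1 capital forward quarter by quarter; return (ending capital, ratios)."""
    if not len(net_income) == len(distributions) == len(risk_weighted_assets):
        raise ValueError("all quarterly inputs must have the same length")
    capital = starting_cet1
    ratios = []
    for income, paid_out, rwa in zip(net_income, distributions, risk_weighted_assets):
        capital += income - paid_out
        ratios.append(capital / rwa)
    return capital, ratios


# Hypothetical nine-quarter W-shaped path ($ millions).
income = [1.5, -2.0, -3.5, 0.5, 1.0, -2.5, -1.5, 1.0, 1.5]
dividends_maintained = [0.8] * 9
dividends_suspended = [0.8, 0.8] + [0.0] * 7
rwa = [900, 910, 905, 900, 895, 900, 905, 910, 915]

for label, payouts in [("dividends maintained", dividends_maintained),
                       ("dividends suspended after Q2", dividends_suspended)]:
    ending, ratios = project_cet1(100.0, income, payouts, rwa)
    print(f"{label}: trough CET1 {min(ratios):.2%}, ending CET1 {ratios[-1]:.2%}")
```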

What Should Your Bank or Credit Union Do with the Results?

What your bank or credit union should do with the results depends on the institution’s specific situation. For example, assume that your stress test reveals lower exposure to certain economically exposed sectors during the pandemic and some countercyclical strengths, such as mortgage banking, asset management, and PPP revenues. These help your bank or CU maintain relatively strong and healthy performance over the stressed period in terms of capital, asset quality, and earnings. This performance could allow for and support a strategic/capital plan involving the continuation of dividends and/or share repurchases, accessing capital and/or sub-debt for growth opportunities, and proactively looking at ways to grow market share both organically and through potential acquisitions during and after the pandemic-induced downturn. For those banks and CUs that include M&A in the strategic/capital plan over the next two years, improved stress testing capabilities should also help when stress testing the target’s loan portfolio during due diligence.

Alternatively, consider a bank that is in a relatively weaker position. In this case, the results may provide key insight that leads to quantifying the potential capital shortfall, if any, relative to either regulatory minimums or internal targets. After estimating the shortfall, management can develop an action plan, which could entail seeking additional common equity, accessing sub-debt, selling branches or higher-risk loan portfolios to shrink the balance sheet, or considering potential merger partners. Integrating the stress test results with identifiable action plans to remediate any capital shortfall can demonstrate that the bank’s existing capital, including any capital enhancement actions taken, is adequate in stressed economic scenarios.
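Quantifying the shortfall then comes down to comparing projected capital against each threshold. A minimal Python sketch, assuming CET1 is the binding measure, a 4.5% CET1 regulatory minimum (the U.S. Basel III minimum for banks; credit unions use a different net worth framework), and a hypothetical 8% internal target. The trough capital and risk-weighted assets are also hypothetical.

```python
def capital_shortfall(projected_capital, risk_weighted_assets, target_ratio):
    """Capital needed to bring the ratio up to target (zero if already above it)."""
    required = target_ratio * risk_weighted_assets
    return max(0.0, required - projected_capital)


thresholds = {
    "regulatory minimum (4.5% CET1)": 0.045,
    "internal target (8.0%, hypothetical)": 0.08,
}
trough_capital, trough_rwa = 62.0, 905.0   # hypothetical severe-scenario trough ($ millions)

for name, ratio in thresholds.items():
    gap = capital_shortfall(trough_capital, trough_rwa, ratio)
    print(f"{name}: shortfall ${gap:,.1f} million")
```

Raising capital is only one way to close such a gap; shrinking the balance sheet works from the other side by reducing risk-weighted assets and therefore the capital required.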

How Mercer Capital Can Help

A well-reasoned and documented stress test can provide regulators, directors, and management the comfort of knowing that capital levels are adequate, at a minimum, to withstand the pandemic and maintain the dividend. A stress test can also support other strategies to enhance shareholder value, such as a share buyback plan, higher dividends, a strategic acquisition, or other actions to take advantage of the disruption caused by the pandemic. The results of the stress test should also enhance your bank or credit union’s decision-making process and be incorporated into strategic planning and the management of credit risk, interest rate risk, and capital.

Having successfully completed thousands of engagements for financial institutions over the last 35 years, Mercer Capital has the experience to solve complex financial issues impacting community banks and credit unions during the ups and downs of economic cycles. Mercer Capital can help scale and improve your institution’s stress testing in a variety of ways. We can provide advice and support for assumptions within your bank or credit union’s pre-existing stress test. We can also develop a unique, custom stress test that incorporates your institution’s desired level of complexity and adequately captures the unique risks you face.

Regardless of the approach, the desired outcome is a stress test and capital plan that can be used by managers, directors, and regulators to monitor capital adequacy, manage risk, enhance the bank’s performance, and improve strategic decisions.

For more information on Mercer Capital’s Stress Testing and Capital Planning solutions, contact Jay Wilson at wilsonj@mercercapital.com.

Originally appeared in Mercer Capital’s Bank Watch, July 2020.

A “Grievous” Valuation Error: Tax Court Protects Boundaries of Fair Market Value in Grieve Decision

All fair market value determinations involve assumptions regarding how buyers and sellers would behave in a transaction involving the subject asset. In a recent Tax Court case, the IRS appraiser applied a novel valuation rationale predicated on transactions involving assets other than the subject interests being valued. In its ruling, the Court concluded that this approach transgressed the boundaries of what may be assumed in a valuation.

Background

At issue in Grieve was the fair market value of non-voting Class B interests in two family LLCs.

- The first, Rabbit, owned a portfolio of marketable securities having a net asset value of approximately $9 million.

- The second, Angus, owned a portfolio of cash, private equity investments, and promissory notes having a net asset value of approximately $32 million.

Both Rabbit and Angus were capitalized with Class A voting and Class B non-voting interests. The Class A voting interests comprised 0.2% of the total economic interest in each entity. The Class A voting interests were owned by the taxpayer’s daughter, who exercised control over the investments and operations of the entities.

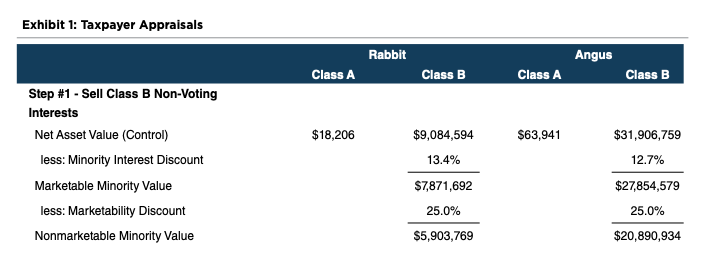

Valuation Conclusion – Taxpayer

The taxpayer measured the fair market value of the Class B non-voting interests using commonly accepted methods for family LLCs.

- The net asset value of each LLC was deemed to represent the value on a controlling interest basis.

- Since the subject Class B non-voting interests did not possess control over either entity, the net asset value was reduced by a minority interest discount. The taxpayer estimated the magnitude of the minority interest discount with reference to studies of minority shares in closed-end funds.

- Unlike minority shares in closed-end funds, the Class B non-voting interests in Rabbit and Angus had no active market. As a result, the taxpayer applied a marketability discount to the marketable minority indication of value, estimating its magnitude with reference to restricted stock studies.

The combined valuation discount applied to the Class B non-voting interests was on the order of 35% for both Rabbit and Angus, as shown in Exhibit 1.
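Because the marketability discount is applied to the marketable minority indication, which already reflects the minority interest discount, the two discounts compound multiplicatively rather than add. The Python sketch below shows the calculation. The 15% and 25% inputs are hypothetical values chosen only to land near the ~35% combined figure; they are not the discounts shown in Exhibit 1.

```python
def combined_discount(minority_discount, marketability_discount):
    """Total discount from pro rata NAV when the discounts are applied in sequence."""
    return 1 - (1 - minority_discount) * (1 - marketability_discount)


def class_b_value(net_asset_value, class_b_share, minority_discount, marketability_discount):
    """Pro rata NAV, less a minority discount, less a marketability discount."""
    pro_rata_nav = net_asset_value * class_b_share
    marketable_minority = pro_rata_nav * (1 - minority_discount)
    return marketable_minority * (1 - marketability_discount)


# Hypothetical discounts; Rabbit's NAV was approximately $9 million per the text.
print(f"Combined discount: {combined_discount(0.15, 0.25):.2%}")
print(f"Class B value: ${class_b_value(9_000_000, 0.998, 0.15, 0.25):,.0f}")
```

Simply adding the two discounts (40% here) would overstate the total; applied in sequence they combine to 36.25%.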

Valuation Conclusion – IRS

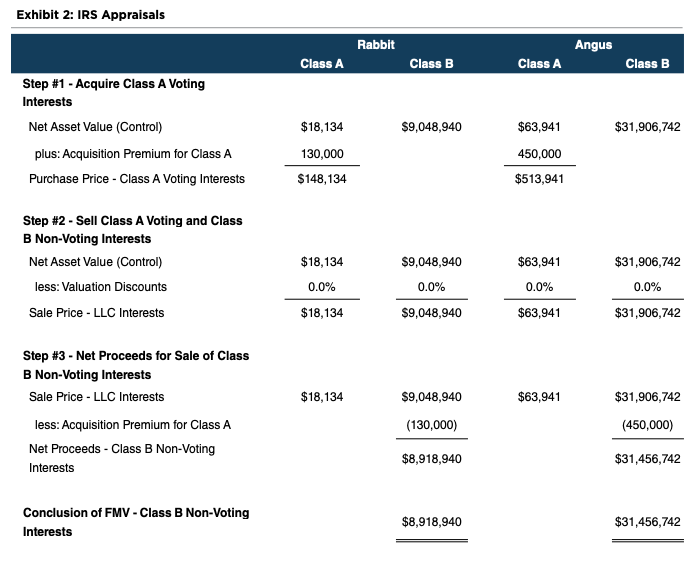

The IRS adopted a novel approach for determining the fair market value of the Class B non-voting interests.

Noting the disparity in economic interests between the Class A voting (0.2%) and Class B non-voting (99.8%) interests, the IRS concluded that a hypothetical willing seller of the Class B non-voting interest would sell the subject interest only after first acquiring the Class A voting interest. Having done so, the owner of the Class B non-voting interest could then sell both the Class A voting and Class B non-voting interests in a single transaction, presumably for net asset value.

If the dollar amount of the premium paid for the Class A voting interest is less than the dollar amount of the aggregate valuation discount applicable to the Class B non-voting interest, the hypothesized series of transactions would yield more net proceeds than simply selling the Class B non-voting interest by itself. The sequence of transactions assumed in the IRS determination of fair market value is summarized in Exhibit 2.
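The arithmetic behind the IRS theory can be sketched in Python. A direct sale yields the pro rata value of the Class B interest less the combined discount. The assumed sequence yields full net asset value less whatever is paid for the Class A interest, taken here as its pro rata value plus a premium. The NAV, discount, and premiums below are hypothetical. The sketch only shows why the sequence looks attractive on paper; whether it was reasonably probable is a separate question.

```python
def direct_sale_proceeds(nav, class_b_share, combined_discount):
    """Sell the non-voting Class B interest on its own, at a discount."""
    return nav * class_b_share * (1 - combined_discount)


def sequence_proceeds(nav, class_b_share, class_a_premium):
    """Buy Class A (pro rata value plus a premium), then sell 100% at NAV."""
    class_a_cost = nav * (1 - class_b_share) + class_a_premium
    return nav - class_a_cost


nav, class_b_share, discount = 9_000_000, 0.998, 0.35   # hypothetical
direct = direct_sale_proceeds(nav, class_b_share, discount)
for premium in (500_000, 2_000_000, 4_000_000):
    sequence = sequence_proceeds(nav, class_b_share, premium)
    better = "sequence" if sequence > direct else "direct sale"
    print(f"premium ${premium:,}: direct ${direct:,.0f}, sequence ${sequence:,.0f}, higher: {better}")
```

The sequence wins exactly when the premium is smaller than the dollar amount of the discount (about $3.1 million with these inputs).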

Tax Court Conclusion

It is certainly true that, if the Class A voting interests could in fact be acquired at the proposed prices, the sequence of transactions assumed by the IRS yields greater net proceeds for the owner of the subject Class B non-voting interests than a direct sale of those interests. However, is the assumed sequence of transactions consistent with fair market value?

The Tax Court concluded that the IRS valuation overstepped the bounds of fair market value. The crux of the Court’s reasoning is summarized in a single sentence from the opinion: “We are looking at the value of the Class B Units on the date of the gifts and not the value of the class B units on the basis of subsequent events that, while within the realm of possibilities, are not reasonably probable, nor the value of the class A units.” Citing a 1934 Supreme Court decision (Olson), the Tax Court noted that “[e]lements affecting the value that depend upon events within the realm of possibility should not be considered if the events are not shown to be reasonably probable.” In view of (1) the Class A owner’s express denial of any willingness to sell the units, (2) the speculative nature of the premiums assumed for the purchase of those interests, and (3) the absence of any peer review or caselaw support for the IRS valuation methodology, the Tax Court concluded that the sequence of transactions proposed by the IRS was not reasonably probable. As a result, the Tax Court rejected the IRS valuations.

The Grieve decision is a positive outcome for taxpayers. In addition to affirming the propriety of traditional valuation approaches for minority interests in family LLCs, the decision clarified the boundaries of fair market value, rejecting a novel approach that assumes the subject interest has attributes it does not, in fact, possess. As the Court concluded, fair market value is determined by considering the motivations of willing buyers and sellers of the subject asset, not those of willing buyers and sellers of other assets.

Originally appeared in Value Matters™, Issue No. 3, 2020

Does Your Bank Need an Interim Impairment Test Due to the Economic Impact of COVID-19?

Analysts and pundits are debating whether the economic recovery will be shaped like a U, V, W, swoosh, or check mark and how long it may take to fully recover. To find clues, many are following the lead of the healthcare professionals and looking to Asia for economic and market data since these economies experienced the earliest hits and recoveries from the COVID-19 pandemic.

Taking a similar approach led me to take a closer look at the Japanese megabanks for clues about how U.S. banks may navigate the COVID-19 crisis. In Japan, the banking industry is grappling with many of the same issues as U.S. banks, including the need to further cut costs, expanding branch closures, enhancing digital efforts, bracing for a tough year as bankruptcies rise, and looking for acquisitions in faster-growing markets.

Another similarity is impairment charges. Two of the three Japanese megabanks recently reported impairment charges. Mitsubishi UFJ Financial Group (MUFG) reported a ¥343 billion impairment charge related to its controlling interests in an Indonesian lender and a Thai lender, whose share prices had dropped ~50% since acquisition. Mizuho Financial Group incurred a ¥39 billion impairment charge.

In the years since the Global Financial Crisis, U.S. banks have recognized few goodwill impairment charges. A handful of banks, including PacWest (NASDAQ-PACW) and Great Western Bancorp (NYSE-GWB), announced impairment charges with the release of 1Q20 results. Both also announced dividend reductions.

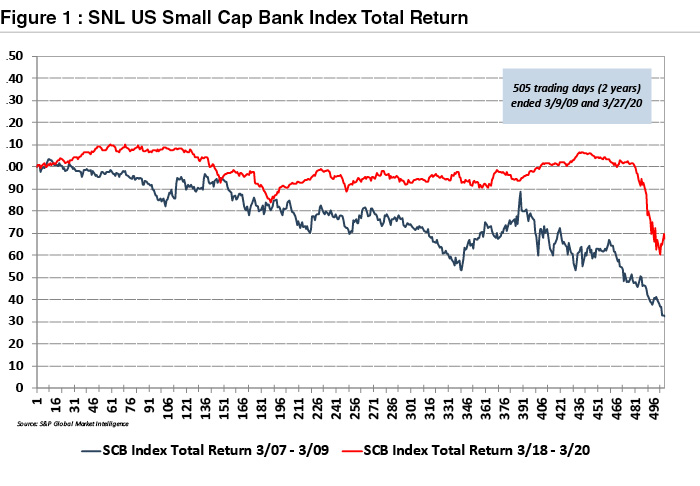

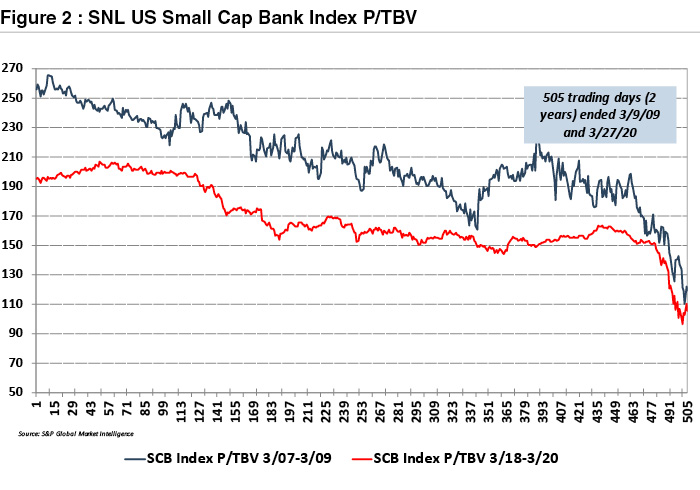

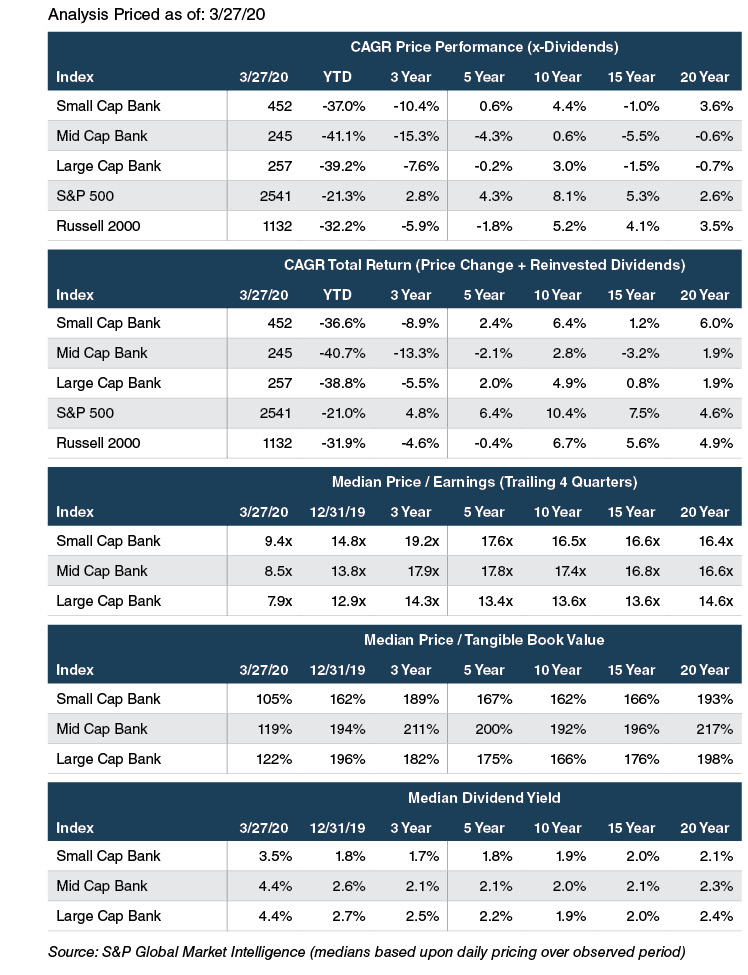

Absent a rebound in bank stocks, more goodwill impairment charges likely will be recognized this year. Bank stocks remain depressed relative to year-end pricing levels despite some improvements in May and early June. For perspective, the S&P 500 Index was down ~5% from year-end 2019 through May 31, 2020 compared to a decline of ~32% for the SNL Small Cap Bank Index and ~34% for the SNL Bank Index.

This sharper decline for banks reflects concerns around net interest margin compression, future credit losses, and loan growth potential. The declines in the public markets mirrored similar declines in M&A activity, and several previously announced bank transactions were terminated before closing, with COVID-19 impacts often cited as a key factor.

Price discovery in the public markets tends to be a leading indicator that impairment charges and/or more robust impairment testing are warranted. The market declines led to multiple compression for most public banks, and the majority have been priced at discounts to book value since late March. At May 31, 2020, ~77% of publicly traded community banks (i.e., those with assets below $5B) were trading at a discount to book value, with a median price-to-book ratio of ~83%. Within the cohort of banks trading below book value at May 31, 2020, ~74% were trading below tangible book value.
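A screen of this kind can be sketched in Python: compute each bank's price-to-book ratio, the share of banks trading below book, the median price-to-book ratio, and, within the below-book cohort, the share also trading below tangible book. The bank names and per-share figures are hypothetical. The text does not say whether the ~83% median covers all community banks or only those below book, so the sketch reports both.

```python
from statistics import median


def book_value_screen(banks):
    """banks: dict of name -> (price, book value per share, tangible book value per share)."""
    pb = {name: price / bv for name, (price, bv, _) in banks.items()}
    below_book = [name for name, ratio in pb.items() if ratio < 1.0]
    below_tangible = [name for name in below_book if banks[name][0] < banks[name][2]]
    return {
        "share_below_book": len(below_book) / len(banks),
        "median_pb_all": median(list(pb.values())),
        "median_pb_below_book": median([pb[n] for n in below_book]) if below_book else None,
        "share_of_below_book_under_tangible": (
            len(below_tangible) / len(below_book) if below_book else None
        ),
    }


hypothetical_banks = {
    "Bank A": (20.0, 25.0, 22.0),
    "Bank B": (30.0, 28.0, 24.0),
    "Bank C": (15.0, 20.0, 14.0),
    "Bank D": (9.0, 10.0, 9.5),
}
for metric, value in book_value_screen(hypothetical_banks).items():
    print(f"{metric}: {value:.2f}")
```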

Do I Need an Impairment Test?

Goodwill impairment testing is typically performed annually. But the unprecedented events precipitated by the COVID-19 pandemic now raise the question of whether an interim goodwill impairment test is warranted.

The accounting guidance in ASC 350 prescribes that interim goodwill impairment tests may be necessary in the case of certain “triggering” events. For public companies, perhaps the most easily observable triggering event is a decline in stock price, but other factors may also constitute a triggering event. Further, these factors apply to both public and private companies, including private companies that have elected to amortize goodwill under the private company alternative (ASU 2014-02).

For interim goodwill impairment tests, ASC 350 notes that management should assess relevant events and circumstances that might make it more likely than not that an impairment condition exists. The guidance provides several examples, a number of which are relevant to the banking industry, including the following:

- Industry and market considerations such as a deterioration in the environment in which an entity operates or an increased competitive environment

- Declines in market-dependent multiples or metrics (consider in both absolute terms and relative to peers)

- Overall financial performance such as negative or declining cash flows or a decline in actual or planned revenue or earnings compared with actual and projected results of relevant prior periods

- Changes in the carrying amount of assets at the reporting unit, including the expectation of selling or disposing of certain assets

- If applicable, a sustained decrease in share price (considered both in absolute terms and relative to peers)

The guidance notes that an entity should also consider positive and mitigating events and circumstances that may affect its conclusion. If a recent impairment test has been performed, the headroom between the recent fair value measurement and carrying amount could also be a factor to consider.

How Does an Impairment Test Work?

Once an entity determines that an interim impairment test is appropriate, a quantitative “Step 1” impairment test is required. Under Step 1, the entity must measure the fair value of the relevant reporting units (or the entire company if the business is defined as a single reporting unit). The fair value of a reporting unit refers to “the price that would be received to sell the unit as a whole in an orderly transaction between market participants at the measurement date.”

For companies that have already adopted ASU 2017-04, the legacy “Step 2” analysis has been eliminated, and the impairment charge is simply the amount by which the reporting unit’s carrying amount exceeds its fair value, limited to the amount of goodwill allocated to that reporting unit.
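Under ASU 2017-04, the one-step test reduces to a short calculation: the charge is the excess of a reporting unit's carrying amount over its fair value, capped at the goodwill allocated to that unit. A minimal Python sketch with hypothetical inputs, ignoring any income tax effects:

```python
def goodwill_impairment(fair_value, carrying_amount, goodwill):
    """Impairment under the simplified (one-step) test.

    The charge is the amount by which carrying amount exceeds fair value,
    limited to the goodwill allocated to the reporting unit.
    """
    excess = carrying_amount - fair_value
    return min(max(excess, 0.0), goodwill)


# Hypothetical reporting unit ($ millions): carrying amount 250, goodwill 40.
for fair_value in (260.0, 230.0, 190.0):
    charge = goodwill_impairment(fair_value, 250.0, 40.0)
    print(f"fair value {fair_value:.0f}: impairment {charge:.0f}")
```

In the last case the $60 million excess is capped at the $40 million of goodwill.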

ASC 820 provides a framework for measuring fair value that recognizes the three traditional valuation approaches: the income approach, the market approach, and the cost approach. As with most valuation assignments, judgment is required to determine which approach or approaches are most appropriate given the facts and circumstances. In our experience, the income and market approaches are the most commonly used in goodwill impairment testing. However, the market approach is somewhat limited in the current environment given the lack of transaction activity in the banking sector since the onset of COVID-19.

In the current environment, we offer the following thoughts on some areas that are likely to draw additional scrutiny from auditors and regulators.

- Are the financial projections used in a discounted cash flow analysis reflective of recent market conditions? What are the model’s sensitivities to changes in key inputs?

- Given developments in the market, do measures of risk (discount rates) need to be updated?

- If market multiples from comparable companies are used to support the valuation, are those multiples still applicable and meaningful in the current environment?

- If precedent M&A transactions are used to support the valuation, are those multiples still relevant in the current environment?

- If the subject company is public, how does its current market capitalization compare to the indicated fair value of the entity (or sum of the reporting units)? What is the implied control premium and is it reasonable in light of current market conditions?
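The market capitalization reconciliation in the last question is simple arithmetic. A minimal Python sketch with hypothetical reporting-unit fair values and share data; whether the resulting premium is reasonable in current market conditions remains a judgment the code does not make.

```python
def implied_control_premium(reporting_unit_fair_values, shares_outstanding, share_price):
    """Premium of the aggregate fair value of the reporting units over market capitalization."""
    market_cap = shares_outstanding * share_price
    return sum(reporting_unit_fair_values) / market_cap - 1


# Hypothetical: two reporting units ($ millions), 10 million shares at $21.50.
premium = implied_control_premium([180.0, 95.0], shares_outstanding=10.0, share_price=21.5)
print(f"Implied control premium: {premium:.1%}")
```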

At a minimum, we anticipate that additional analyses and support will be necessary to address these questions. The documentation from an impairment test at December 31, 2019 might provide a starting point, but the reality is that the economic and market landscape has changed significantly in the first half of 2020.

Concluding Thoughts

While not all industries have been affected in the same way by the COVID-19 pandemic and economic shutdown, the banking industry will not escape unscathed, given the depressed valuations observed in the public markets. For public and private banks alike, it can be difficult to ignore the sustained and significant drop in publicly traded bank stock prices and its potential implications for fair value and goodwill impairment.

At Mercer Capital, we have experience in implementing both the qualitative and quantitative aspects of interim goodwill impairment testing. To discuss the implications and timing of triggering events, please contact a professional in Mercer Capital’s Financial Institutions Group.

Originally published in Bank Watch, June 2020.

Top Three Valuation Considerations for Credit Unions When Contemplating a Bank Acquisition

After five or six years of strong activity, bank M&A slowed drastically in 2020 following the onset of COVID-19. We expect M&A activity to rebound eventually, once buyers have more confidence in the economy and the COVID-19 medical outlook. At that point, there will be greater certainty around sellers’ earnings outlooks and credit quality, particularly for those loan segments more exposed to the post-COVID-19 economic environment.

The factors that drive consolidation, such as buyers’ needs to obtain scale, improve profitability, and support growth, will remain, as will sellers’ desire to exit due to shareholder liquidity needs and management succession, among other reasons.

Credit Unions as Bank Acquirers

One emerging trend prior to the bank M&A slowdown in March 2020 was credit unions (“CUs”) acquiring small community banks. Since January 1, 2015, there have been 36 acquisitions of banks by CUs, of which 15 were announced in 2019.

Beyond the consolidation drivers noted above, credit unions can benefit from diversifying their loan portfolios away from a heavy reliance on consumer lending and into new geographic markets. Bank acquisitions can also enhance the growth profile of the acquiring CU.

From the first quarter of 2015 through the second quarter of 2019, CUs that acquired banks grew their membership by ~23%, compared to ~15% for other CUs, according to S&P Global Market Intelligence. A positive for community bank sellers is that CUs pay cash and often acquire small banks located in small communities or even rural areas that do not interest most large community and regional bank acquirers.

Valuation Issues to Consider When a Credit Union Acquires a Commercial Bank

There are, of course, unique valuation issues to consider when a credit union buys (or is bidding for) a commercial bank.

- Transaction Form and Consideration. Transactions are often structured as an asset purchase whereby the CU pays cash consideration to acquire the assets and assume the liabilities of the underlying bank.

- Taxes (CU Perspective). CUs do not pay corporate income taxes, and this precludes them from acquiring certain tax-related assets and liabilities on the bank’s balance sheet, such as a deferred tax asset.

- Taxes (Bank Perspective). If a holding company owns a bank that is sold to a CU, then any gain will likely be subject to taxation prior to the holding company satisfying any liabilities and paying a liquidating distribution to shareholders.

- Expense Synergies. CUs often extract smaller cost savings than a bank buyer would, because they frequently view bank acquisitions as part of a membership growth strategy: the transaction expands their geographic and membership footprint, with fewer or no branch closures.

- Capital Considerations. CUs must maintain a net worth ratio of at least 7.0% to be deemed “well capitalized” by regulators. The net worth ratio is akin to a bank’s leverage ratio, and the acquisition’s pro forma impact on it should be estimated, because the increase in assets from the acquisition can reduce the CU’s post-close net worth ratio. Some CUs may be able to issue subordinated debt and count it as capital, but CUs often rely primarily on retained earnings to increase capital.

- Other. CU acquisitions often take longer to close than traditional bank acquisitions, so an interim forecast of earnings and distributions may be needed for both the bank and the CU to better estimate the pro forma balance sheet at closing.
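The capital point can be illustrated with a simplified pro forma calculation. This is a sketch under stated assumptions, not a regulatory computation: it assumes an all-cash deal in which the cash paid equals the fair value of identifiable net assets plus goodwill, so total assets grow by roughly the liabilities (mostly deposits) assumed while net worth does not. It ignores any regulatory adjustments to net worth or assets. The function name and all dollar figures are hypothetical.

```python
WELL_CAPITALIZED = 0.07  # net worth ratio threshold for "well capitalized" cited above


def pro_forma_net_worth_ratio(
    cu_net_worth: float,
    cu_total_assets: float,
    liabilities_assumed: float,
    transaction_costs: float = 0.0,
    interim_earnings: float = 0.0,
) -> float:
    """Simplified post-close net worth ratio for an all-cash bank acquisition.

    Assumes cash paid = fair value of identifiable net assets + goodwill, so the cash
    leaving the CU is replaced by acquired assets and total assets grow by the
    liabilities assumed. Expensed transaction costs reduce net worth and cash; interim
    earnings retained before closing add to both. No regulatory adjustments are modeled.
    """
    net_worth = cu_net_worth + interim_earnings - transaction_costs
    total_assets = cu_total_assets + interim_earnings + liabilities_assumed - transaction_costs
    return net_worth / total_assets


# Hypothetical CU with $1.0 billion of assets and a 10.0% net worth ratio ($ millions)
ratio = pro_forma_net_worth_ratio(100.0, 1_000.0, liabilities_assumed=300.0, transaction_costs=2.0)
print(f"Pro forma net worth ratio: {ratio:.2%}; well capitalized: {ratio >= WELL_CAPITALIZED}")
# Pro forma net worth ratio: 7.55%; well capitalized: True
```

In this hypothetical, a CU starting at 10% ends up only modestly above the 7.0% threshold, which is why the pro forma ratio deserves attention before pricing is set.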

Valuation Considerations for Credit Unions When Contemplating Acquiring a Bank

Based upon our experience of working as the financial advisor to credit unions that are contemplating an acquisition of a bank, we see three broad factors CUs should consider.

Developing a Reasonable Valuation Range for the Bank Target

Developing a reasonable valuation for a bank target is important in any economic environment but particularly so in the post-COVID-19 environment. Generally, the guideline M&A comparable transactions and discounted cash flow (“DCF”) valuation methods are relied upon.

In the pre-COVID-19 environment, transaction data was more readily available, so one could tailor one or more M&A comparable groups that closely reflected the target’s geographic location, asset size, financial performance, and the like. Until sufficient M&A activity resumes, timely and relevant market data will be limited. Even when M&A activity resumes, inferences from historical CU deal data should be drawn with caution: the sample is small (~35 pre-COVID-19 deals), only 75% of deals announced since 2015 disclosed pricing, and the reported P/TBV multiples span a wide range of ~0.5x to ~1.7x (with a median of ~1.3x).
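To show how wide that range is in dollar terms, the sketch below applies the approximate P/TBV statistics cited above to a hypothetical target with $40 million of tangible book value. The target and the helper function are illustrative assumptions only.

```python
# Approximate P/TBV statistics for pre-COVID-19 CU bank deals, as cited above.
CU_DEAL_PTBV = {"low": 0.5, "median": 1.3, "high": 1.7}


def ptbv_value_range(target_tbv: float, multiples: dict = CU_DEAL_PTBV) -> dict:
    """Apply each P/TBV multiple to the target's tangible book value."""
    return {label: round(target_tbv * multiple, 2) for label, multiple in multiples.items()}


print(ptbv_value_range(40.0))  # hypothetical $40 million TBV
# {'low': 20.0, 'median': 52.0, 'high': 68.0}
```

A spread of $20 million to $68 million for the same target is one reason the market approach rarely settles value on its own for a CU buyer.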

While deal values are often reported and compared as multiples of tangible book value, CU acquirers, like most bank acquirers, view value as a function of the cash flows they believe the bank target can produce in the future once merged with their CU.

A key question to consider is: What factors drive the cash flow forecast and ultimately value? No two valuations or cash flow estimates are alike and determining the value for a bank or its branches requires evaluating both qualitative and quantitative factors bearing on the target bank’s current performance, outlook, growth potential, and risk attributes.

In our experience, the primary value drivers span both qualitative and quantitative factors. In a post-COVID-19 valuation, a CU may have a high degree of confidence in expense savings but less in other aspects of the forecast, especially growth potential, credit losses, and the net interest margin (“NIM”).
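A minimal DCF sketch can show how forecast uncertainty, such as credit losses, flows through to value. It assumes the simplified annual forecast can be treated as distributable cash flow (ignoring taxes, consistent with a CU acquirer, and any earnings that must be retained to support capital) and uses a Gordon growth terminal value. All inputs, including the 12% discount rate and 3% terminal growth, are hypothetical.

```python
def dcf_value(cash_flows, discount_rate, terminal_growth):
    """PV of annual cash flows (received at year-end) plus a Gordon growth terminal value."""
    if discount_rate <= terminal_growth:
        raise ValueError("discount rate must exceed the terminal growth rate")
    pv_forecast = sum(cf / (1 + discount_rate) ** t for t, cf in enumerate(cash_flows, start=1))
    terminal_value = cash_flows[-1] * (1 + terminal_growth) / (discount_rate - terminal_growth)
    pv_terminal = terminal_value / (1 + discount_rate) ** len(cash_flows)
    return pv_forecast + pv_terminal


# Hypothetical five-year forecast, $ millions: target earnings, expense savings, credit losses.
target_earnings = [4.0, 4.2, 4.4, 4.6, 4.8]
expense_savings = [1.0] * 5
credit_loss_scenarios = {"base": 0.5, "downside": 1.5}

values = {}
for scenario, losses in credit_loss_scenarios.items():
    flows = [e + s - losses for e, s in zip(target_earnings, expense_savings)]
    values[scenario] = dcf_value(flows, discount_rate=0.12, terminal_growth=0.03)
    print(f"{scenario}: {values[scenario]:.1f}")
```

Holding expense savings fixed and flexing only credit losses mirrors the point above: the less certain inputs are where scenario analysis earns its keep.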

Developing Accurate Fair Value Estimates of the Loan Portfolio and Core Deposit Intangible

It is important for CUs to develop reasonable and accurate fair value estimates, as these estimates will affect the CU’s pro forma net worth at closing as well as its future earnings and net worth. In the initial accounting for a bank acquisition by a CU, acquired assets and liabilities are marked to their fair values, with the most significant marks typically for the loan portfolio followed by depositor customer relationship (core deposit) intangible assets.

- Loan Valuation. The loan valuation process can be complex even before factoring in the effect of COVID-19 on interest rates and credit loss assumptions. Our loan valuation process begins with due diligence discussions with the target’s management to understand its underwriting strategy as well as specific areas of concern in the portfolio. We also typically incorporate the perspective of the CU acquirer’s loan review personnel. The valuation itself often relies on a) monthly cash flow forecasts that consider both the contractual loan terms and the outlook for future interest rates; b) prepayment speeds; c) credit loss estimates based on qualitative and quantitative assumptions; and d) appropriate discount rates. Problem credits above a certain threshold are typically evaluated on an individual basis.
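The mechanics of items a) through d) can be sketched for a single pool of fixed-rate, level-payment loans. This is a simplified illustration, not a full loan valuation model: it assumes annualized prepayment (CPR) and default (CDR) rates converted to monthly rates, immediate recovery of defaulted balances net of a loss severity, and no rate resets. The function name and every input are hypothetical.

```python
def loan_pool_fair_value(
    balance: float,
    note_rate: float,
    term_months: int,
    cpr: float,
    cdr: float,
    severity: float,
    discount_rate: float,
) -> float:
    """Discount projected monthly cash flows of a fixed-rate, level-payment loan pool.

    cpr/cdr are annualized prepayment/default rates, converted to monthly rates;
    severity is the share of a defaulted balance that is lost (recovered immediately).
    """
    smm = 1 - (1 - cpr) ** (1 / 12)  # single monthly mortality (prepayments)
    mdr = 1 - (1 - cdr) ** (1 / 12)  # monthly default rate
    r, d = note_rate / 12, discount_rate / 12
    fair_value = 0.0
    for month in range(1, term_months + 1):
        remaining = term_months - month + 1
        defaults = balance * mdr
        recovery = defaults * (1 - severity)
        performing = balance - defaults
        interest = performing * r
        # Level payment that amortizes the performing balance over the remaining term
        payment = performing / remaining if r == 0 else performing * r / (1 - (1 + r) ** -remaining)
        scheduled_principal = payment - interest
        prepayment = (performing - scheduled_principal) * smm
        cash_flow = interest + scheduled_principal + prepayment + recovery
        fair_value += cash_flow / (1 + d) ** month
        balance = performing - scheduled_principal - prepayment
    return fair_value


par = 1_000_000.0
at_note_rate = loan_pool_fair_value(par, 0.05, 60, cpr=0.10, cdr=0.0, severity=0.0, discount_rate=0.05)
stressed = loan_pool_fair_value(par, 0.05, 60, cpr=0.10, cdr=0.02, severity=0.35, discount_rate=0.065)
print(f"Mark on stressed pool: {stressed / par - 1:.2%}")
```

As a sanity check, a pool with no credit losses discounted at its own note rate values at par regardless of prepayment speed; the mark comes from credit losses and from discount rates that differ from the note rate.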

- Core Deposit Intangible Valuation. Core deposit intangible asset values are driven by both market factors (interest rates) and bank-specific factors such as customer retention, deposit base characteristics, and a bank’s expense and fee structure. We also assess market data regarding the costs of alternative funding sources, the forward rate curves, and the sensitivity of the acquired deposit base to changes in market interest rates. Simultaneously, we analyze the cost of the acquired deposits relative to the market environment, looking at current interest rates paid on the deposits as well as other expenses required to service the accounts and fee income that may be generated by the accounts. We analyze historical retention characteristics of the acquired deposits and the outlook for future account retention to develop a detailed forecast of the future cost of the acquired deposits relative to an alternative cost of funds.
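A simplified cost-savings sketch ties these inputs together: each year, the retained balance earns the spread between an alternative funding cost and the all-in cost of the deposits (interest paid plus servicing expense net of fee income). It assumes a constant annual retention rate, a deposit rate that reprices by a hypothetical beta times the change in the alternative rate, and a flat discount rate. The function name, curves, and parameters are illustrative assumptions, not an actual engagement model.

```python
def core_deposit_intangible(
    balance: float,
    annual_retention: float,
    deposit_rate: float,
    deposit_beta: float,
    net_servicing_cost: float,
    alternative_rates: list,
    discount_rate: float,
) -> float:
    """Cost-savings sketch: PV of (alternative funding cost - all-in deposit cost) x balance.

    alternative_rates: projected cost of alternative funding for each year (e.g. from a
    forward curve); the deposit rate reprices by deposit_beta times the change in that
    rate. net_servicing_cost = servicing expense less fee income, as a % of balance.
    """
    value = 0.0
    for year, alt_rate in enumerate(alternative_rates, start=1):
        begin = balance * annual_retention ** (year - 1)
        average_balance = (begin + begin * annual_retention) / 2  # accounts run off over the year
        rate_paid = max(0.0, deposit_rate + deposit_beta * (alt_rate - alternative_rates[0]))
        all_in_cost = rate_paid + net_servicing_cost
        value += average_balance * (alt_rate - all_in_cost) / (1 + discount_rate) ** year
    return value


deposits = 250.0  # hypothetical $ millions of core deposits
common = dict(annual_retention=0.88, deposit_beta=0.3, net_servicing_cost=0.004, discount_rate=0.10)
low_curve = [0.010 + 0.001 * t for t in range(10)]   # hypothetical low-rate forward curve
high_curve = [0.025 + 0.001 * t for t in range(10)]  # hypothetical higher-rate curve
cdi_low = core_deposit_intangible(deposits, deposit_rate=0.002, alternative_rates=low_curve, **common)
cdi_high = core_deposit_intangible(deposits, deposit_rate=0.008, alternative_rates=high_curve, **common)
print(f"CDI as % of deposits: low rates {cdi_low / deposits:.2%}, higher rates {cdi_high / deposits:.2%}")
```

The comparison reflects the market-factor point above: when alternative funding is cheap, the spread a core deposit base saves narrows, and so does the intangible.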

Evaluating Key Deal Metrics to Model Strength or Weakness of Transaction

Once a valuation range is determined and the pro forma balance sheet is prepared, the CU can then begin to model certain deal metrics to assess the strengths and weaknesses of the transaction. Many of the traditional metrics that banks use when assessing bank targets are also commonly evaluated by CUs, including net worth (or book value) dilution and the earnback period, earnings accretion/dilution, and an internal rate of return analysis. These and other measures are usually meaningfully affected by the opportunity cost of the cash allocated to the purchase and by retention estimates for accounts and lines of business that may have an uncertain future as part of a CU.
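Dilution and earnback can be sketched in a few lines. The version below assumes dilution is measured on a tangible basis (the price paid above the fair value of tangible net assets, i.e., goodwill plus CDI, plus expensed transaction costs) and uses the simple earnback method, which divides dilution by incremental annual earnings net of the yield forgone on the cash paid. It ignores CDI amortization and attrition, and other earnback conventions (such as the crossover method) exist. All names and figures are hypothetical.

```python
import math


def tangible_net_worth_dilution(price: float, fv_tangible_net_assets: float, transaction_costs: float) -> float:
    """Price paid above fair value of tangible net assets (goodwill + CDI) plus expensed costs."""
    return (price - fv_tangible_net_assets) + transaction_costs


def incremental_earnings(target_earnings: float, expense_savings: float, price: float, opportunity_rate: float) -> float:
    """Annual earnings added by the deal, net of the yield forgone on the cash paid."""
    return target_earnings + expense_savings - price * opportunity_rate


def simple_earnback_years(dilution: float, annual_increment: float) -> float:
    """Years of incremental earnings needed to rebuild the dilution (simple method)."""
    return math.inf if annual_increment <= 0 else dilution / annual_increment


# Hypothetical deal, $ millions
dilution = tangible_net_worth_dilution(price=52.0, fv_tangible_net_assets=45.0, transaction_costs=2.0)
increment = incremental_earnings(target_earnings=4.5, expense_savings=1.0, price=52.0, opportunity_rate=0.015)
print(f"Dilution {dilution:.1f}, earnback {simple_earnback_years(dilution, increment):.1f} years")
# Dilution 9.0, earnback 1.9 years
```

Raising the opportunity rate on cash or trimming retention-driven earnings lengthens the earnback, which is why those assumptions tend to move these metrics meaningfully.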

One deal metric that often receives considerable focus from CUs is the estimated internal rate of return (“IRR”) for the transaction, based on the following key items: the cash price for the acquisition, the opportunity cost of the cash, and the forecast cash flows/valuation for the target, inclusive of any expense savings and growth/attrition over time in lines of business. This IRR estimate can then be compared to the CU’s historical and/or projected return on equity or net worth to assess whether the transaction offers the potential to enhance pro forma cash flow and provide a reasonable return to the CU and its members. In our experience, an IRR estimate 200-500 basis points (2-5%) above the CU’s historical return on equity (net worth) implies an attractive acquisition candidate.
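The IRR comparison can be sketched without external libraries by solving for the rate at which net present value is zero, here via bisection. The cash flows below are hypothetical: the cash price at closing, annual cash flows to the CU, and an assumed terminal value in year five. The 8% historical return on net worth is also an assumption.

```python
def npv(rate: float, cash_flows: list) -> float:
    """Net present value, with cash_flows[0] occurring today."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows: list, low: float = -0.99, high: float = 1.0, tol: float = 1e-10, max_iter: int = 200) -> float:
    """Find the rate where NPV = 0 by bisection; requires a sign change on [low, high]."""
    f_low = npv(low, cash_flows)
    if f_low * npv(high, cash_flows) > 0:
        raise ValueError("IRR is not bracketed by [low, high]")
    for _ in range(max_iter):
        mid = (low + high) / 2
        f_mid = npv(mid, cash_flows)
        if abs(f_mid) < tol or (high - low) / 2 < tol:
            return mid
        if f_low * f_mid < 0:
            high = mid  # root lies in the lower half
        else:
            low, f_low = mid, f_mid  # root lies in the upper half
    return (low + high) / 2


price = 52.0  # hypothetical cash price, $ millions
flows = [4.7, 5.0, 5.3, 5.6, 5.9 + 60.0]  # hypothetical cash flows; year 5 includes terminal value
deal_irr = irr([-price] + flows)
historical_roe = 0.08  # hypothetical historical return on net worth
spread_bps = (deal_irr - historical_roe) * 10_000
print(f"IRR {deal_irr:.2%} vs. ROE {historical_roe:.2%}: spread {spread_bps:.0f} bps")
print("Within the 200-500 bp range cited above:", 200 <= spread_bps <= 500)
```

Bisection is used here only because it is simple and dependable for a conventional pattern of one outflow followed by inflows; any standard IRR solver would serve.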

Conclusion

Mercer Capital has significant experience providing valuation, due diligence, and advisory services to credit unions and community banks across each phase of a potential transaction. Our services for CUs include providing initial valuation ranges for bank targets, performing due diligence on targets during the negotiation phase, providing fairness opinions and presentations related to the acquisition to the CU’s management and/or Board, and providing fair value estimates of loans and core deposits prior to or at closing.

We also provide valuation and advisory services to community banks considering strategic options and can assist with developing a process to maximize valuation upon exit by including a credit union in the transaction process. Feel free to reach out to us to discuss your community bank or credit union’s unique situation in confidence.

Originally published in Bank Watch, May 2020.

What’s For Dinner? Pandemic Impacts on Restaurant Valuations and How Restaurant Operators are Navigating Soft Re-Openings

The National Restaurant Association’s Restaurant Performance Index (“RPI”) for March dropped to its lowest level since the Index began in 2002. The March reading of 95.0 was down from 101.9 in February; a reading of 100 separates expansion from contraction. The RPI is equally weighted between the Current Situation Index (93.1) and the Expectations Index (97.0). The Expectations Index, which measures operators’ six-month outlook, is usually the higher of the two, but it is telling that it remains below 100 looking out to September.

Coming into 2020, there was a growing chasm between the valuations of many restaurant brands that earn significant franchise royalty revenues and those of their franchisee operating counterparts. Franchisee valuations have lagged as margins have been squeezed by factors including costly third-party delivery services. Perhaps these costs will abate once the delivery subindustry experiences some consolidation, such as Uber’s recently announced attempt to acquire Grubhub. Or perhaps consolidation will further squeeze restaurant operators’ bottom lines as delivery companies face less competition. Either way, franchisors clipping royalties off the top of revenues have been protected, and their asset-light approach has streamlined operations. However, the success of this business model is premised on the idea that operator margins may be squeezed but their revenues will not materially decline. The demand shock created by COVID-19 has burst the bubble on this investment thesis, bringing down valuations for franchisors and operators alike. It will be interesting to see how this affects the relationship between brands and operators going forward, as franchisees have long bemoaned expensive capex requirements that consume cash and may boost revenues (and thus royalties) more than franchisee profits.

Restaurants with significant leverage (debt or other fixed costs, such as rent not tied to a percentage of sales) are likely to fare worse than others. Brand may also play an increased role as consumers seek to “support local.” Stay-at-home orders have increased grocery bills and confined restaurant trips to takeout. In certain areas, restrictions have been eased, including Nashville, where retail and restaurant businesses are allowed to open at 50% capacity as part of Phase One of the city’s reopening plan. While allowing restaurants to reopen is the first step toward returning to normalcy, there are many more steps to go. First, restaurants must be willing to reopen amid concerns about staffing and profitability, and for restaurants to be willing to open, they must believe customers will be willing to return as well.
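The leverage point can be illustrated with two hypothetical restaurants that earn the same profit before the shutdown but differ in cost structure: one pays a fixed rent, the other a lower base of fixed costs plus rent set as a percentage of sales. The cost ratios, rents, and the 40% sales decline are assumptions chosen only to show the mechanics. Fixed debt service would behave like the fixed rent here.

```python
def operating_income(revenue: float, variable_cost_ratio: float, fixed_costs: float, pct_rent: float = 0.0) -> float:
    """Revenue less costs that scale with sales (incl. any percentage rent) less fixed costs."""
    return revenue * (1 - variable_cost_ratio - pct_rent) - fixed_costs


# Two hypothetical restaurants with identical pre-COVID sales and profit
baseline_sales = 1_000_000.0
restaurants = {
    "fixed rent": dict(variable_cost_ratio=0.65, fixed_costs=260_000.0),
    "percentage rent": dict(variable_cost_ratio=0.65, fixed_costs=180_000.0, pct_rent=0.08),
}
results = {}
for name, costs in restaurants.items():
    before = operating_income(baseline_sales, **costs)
    after = operating_income(baseline_sales * 0.60, **costs)  # assumed 40% sales decline
    results[name] = (before, after)
    print(f"{name}: {before:,.0f} -> {after:,.0f}")
```

Both start at $90,000 of operating income, but after the same sales decline the fixed-rent operator loses $140,000 of profit versus $108,000 for the percentage-rent operator, because more of its cost base does not shrink with sales.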